How a New QA Professional Can Use the 11-Day Plan to Find Defects

If you’re just starting out in agent QA, here’s how to apply each day’s focus to systematically uncover issues:

The rapid evolution of artificial intelligence is creating new career opportunities, with both technical and non-technical roles rising in demand. Here’s a snapshot of the most in-demand AI roles projected for the future:

| Role | Description |
|---|---|
| AI Engineer | Designs and maintains AI systems |
| Prompt Engineer | Crafts textual prompts for generative AI |
| AI Content Creator | Creates AI-enabled digital content |
| Data Scientist | Analyzes and interprets complex data with AI |
| NLP Engineer | Develops AI that understands language |
| AI Product Manager | Leads building and launching new AI products |
| AI Ethicist | Governs ethical AI practices |
| Digital Twin Engineer | Builds virtual models of physical systems with AI |
| Human-AI Interaction Designer | Designs seamless experiences between users and AI |
| Data Annotator | Labels data for AI model training |

AI’s job market is rapidly evolving, with employers now prioritizing both technical mastery and human-centered skills. Whether technical or creative, countless opportunities exist for those ready to adapt, specialize, and upskill in this dynamic field.

Remember that the most successful transitions leverage your existing knowledge while strategically adding new AI skills. Focus on the natural bridge between your current expertise and your target AI role for the smoothest transition.

As AI and automation continue to redefine how industries operate, IT professionals around the world face a stark choice: adapt or become obsolete.

This article outlines the key challenges in the current tech job landscape—and a solution that goes beyond certifications: proving your competence through real-world projects and demos. Based on the talk “Do You Want Competent AI Job Offers Globally?” by vskumarcoaching.com, this is your guide to building a future-proof AI career.

With AI tools evolving every 3–6 months, most skills have a short shelf life. Without ongoing learning, even seasoned professionals fall behind.

Companies are moving rapidly into machine learning, data science, and GenAI projects—meaning roles that used to be routine now require deeper tech fluency.

HR teams often can’t distinguish between genuine experience and fabricated resumes. The result? Unskilled hires, project failures, and trust erosion.

Professionals are expected to learn and deliver simultaneously, often working 14–16 hours per day. But very few receive structured guidance on how to upgrade their skills effectively.

Shanthi Kumar V’s approach at vskumarcoaching.com is simple but powerful:

“Don’t just learn. Build, show, and prove your skills.”

Every learner gets a personalized plan based on:

Participants complete real tasks weekly, guided by mentors. These are recorded as demos—your proof of work. No fluff. Just real, job-level experience.

You’ll work with:

This isn’t a lab simulation—it’s what real project teams do.

No more theoretical fluff. You build job-relevant skills that match actual hiring needs in AI roles.

Instead of listing courses, you’ll show:

These become your talking points in interviews.

Whether you’re aiming for promotion, a company switch, or a complete career change, you’ll have the tools (and confidence) to do it.

If you ever face layoffs or need to switch jobs, you won’t panic. With weekly demos and updated skills, you’ll attract multiple offers.

By the end of the program, you’ll be confident in:

✅ Certifications get you noticed. Real work gets you hired.

In the AI-driven job market, what matters is:

Start now. Build your AI career with confidence and clarity.

🔗 Book your call at vskumarcoaching.com

For detailed curriculum:

Watch the following video for a detailed analysis of the risk of facing fabricated files in projects, for both individuals and companies:

Powered by: vskumarcoaching.com

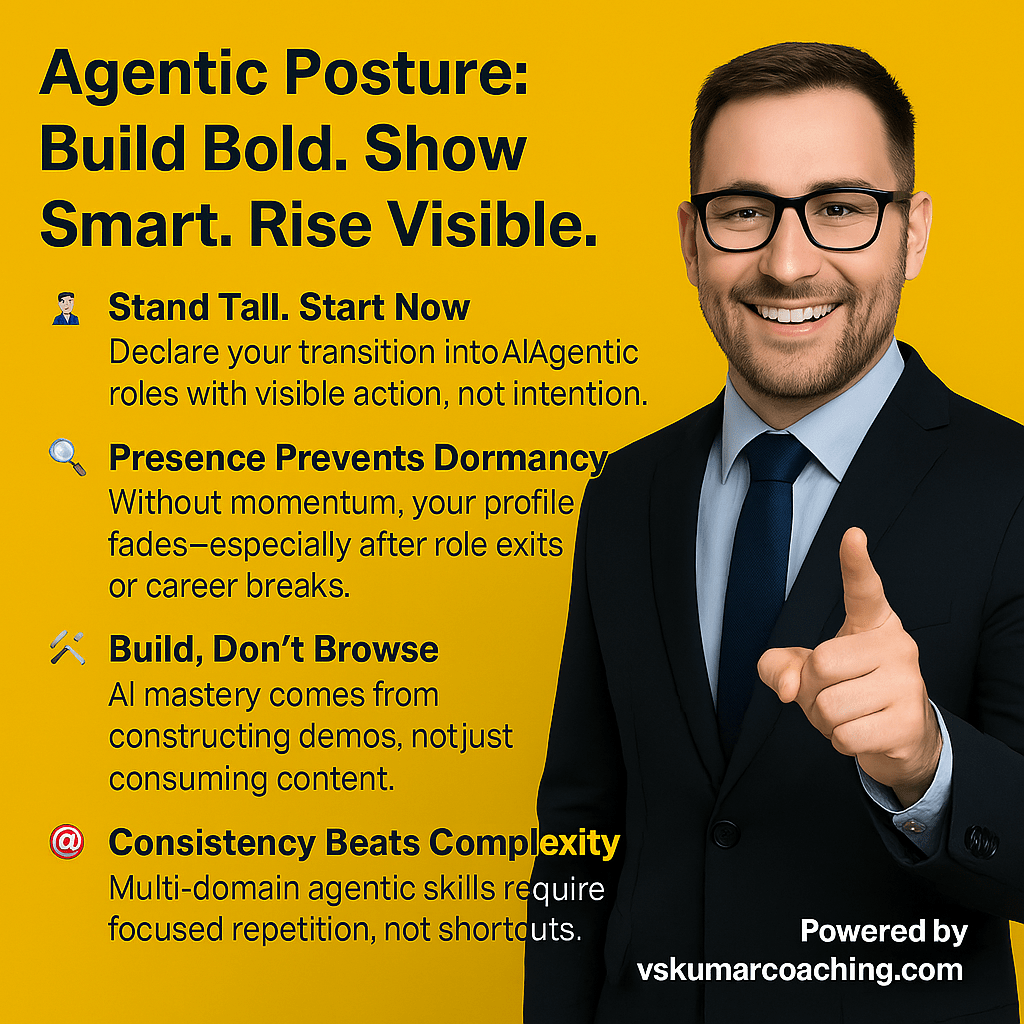

In a market flooded with AI bootcamps, certificate showcases, and LinkedIn buzzwords, one truth remains: credibility can’t be manufactured—it must be demonstrated. The Agentic AI Scaling-Up Program was born from this very reality. Led by Shanthi Kumar V, it delivers a transformational shift for professionals who want to showcase proof of value—not just proof of attendance.

Today, AI-related profiles are going viral for reasons that have little to do with execution:

These profiles may generate attention—but they rarely survive technical scrutiny, recruiter filters, or client walkthroughs. Recruiters now ask:

“Can you walk me through a live demo involving fallback handling, chaining logic, or domain-specific orchestration?”

Most viral resumes collapse under that question. Why? Because visibility without substance is short-lived.

This program isn’t a theory lab—it’s a launchpad for experiential credibility.

Every deliverable becomes a reflection of readiness—not intention.

This program equips learners to move from self-proclaimed readiness to recruiter-proof mastery. You don’t just learn about agentic AI—you execute it.

Multi-role capabilities aren’t built on the fly. They’re built project by project, through intentional coaching, energy alignment, and iterative delivery.

You can build a profile based on certificates. Or a portfolio based on outcomes.

You can go viral for keywords. Or get hired for proof.

If you’re ready to activate clarity, construct demos, and rise with presence—join the Agentic AI Scaling-Up Program today.

🔗 Explore more at vskumarcoaching.com

📩 For direct guidance or to discuss your AI transition/counseling call personally, DM Shanthi Kumar V on LinkedIn:

🔗 linkedin.com/in/vskumaritpractices

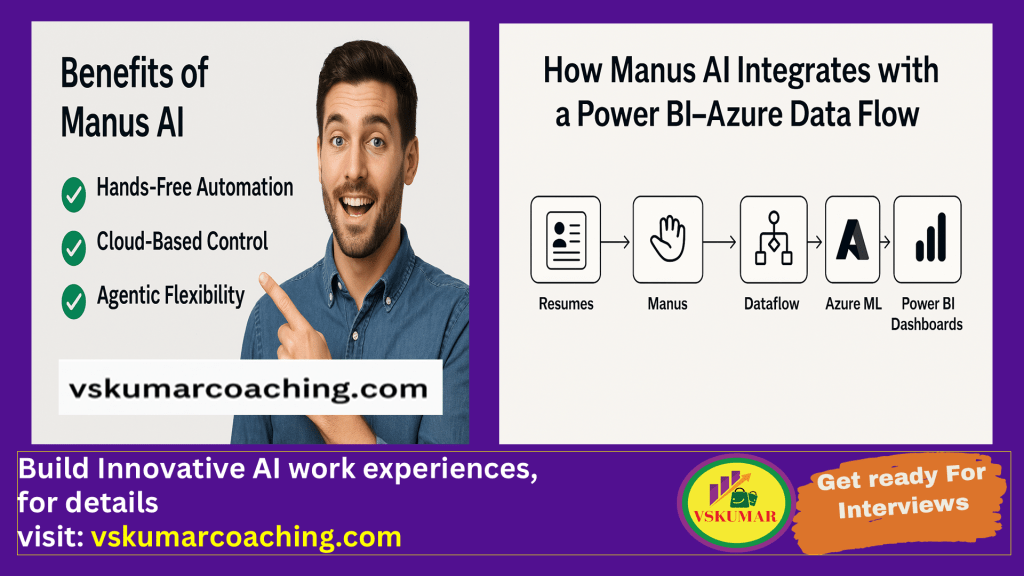

In a typical Power BI–Azure data flow, you orchestrate data ingestion, transformation, modeling, and visualization using various Azure services. Manus AI enters the picture not as a data tool—but as an agentic orchestrator that automates the entire sequence with minimal intervention.

Let’s break it down step-by-step.

First, you might have raw resumes stored in email attachments or OneDrive folders. Manus can autonomously browse cloud directories, unzip and classify files, and identify resumes tagged for healthcare IT roles. It doesn’t just retrieve them—it can pre-process metadata, like extract candidate names or filter by experience.

Next, Manus could trigger an Azure Data Factory pipeline to load this data into Azure Data Lake. If there’s a schema mismatch or a broken flow, Manus can detect and attempt corrective steps—like reformatting fields or restarting specific activities.

From there, the cleaned data enters an Azure Machine Learning pipeline. Manus handles model invocation, passing in resumes to generate prediction scores for job fit. It can evaluate the output against thresholds, flag anomalies, and update logs.

Once scoring is complete, Manus updates Power BI dashboards. Instead of manually configuring datasets or applying filters, Manus could dynamically adjust the dashboard—perhaps applying a skill-based filter, adding annotations, or even regenerating visuals if data trends shift.

Finally, Manus could be the trigger for action. Let’s say a candidate scores above 75. Manus can instantly activate your batch ADB–VLC script to place voice calls or send outreach messages, without requiring you to monitor the dashboards in real time.
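The threshold rule in this last step can be sketched in a few lines of Python. This is only an illustration, not Manus's actual interface: the `Candidate` type, the `select_for_outreach` helper, and the sample scores are hypothetical, and only the cutoff of 75 comes from the text above.

```python
from dataclasses import dataclass

# Cutoff taken from the text; everything else here is a hypothetical stand-in.
SCORE_THRESHOLD = 75


@dataclass
class Candidate:
    name: str
    score: float  # job-fit score produced by the scoring step


def select_for_outreach(candidates, threshold=SCORE_THRESHOLD):
    """Return the candidates whose score is strictly above the threshold."""
    return [c for c in candidates if c.score > threshold]


# Made-up sample data for illustration only.
candidates = [
    Candidate("A", 82.0),
    Candidate("B", 75.0),
    Candidate("C", 61.5),
]
selected = select_for_outreach(candidates)
```

Keeping the selection logic separate from the outreach action makes it easy to review who would be contacted before any call or message is actually triggered.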

What makes Manus unique here is its ability to manage this full cycle—from sourcing to scoring to outreach—autonomously, using its sandboxed tool ecosystem. You assign the job once, and it runs with it, verifying outputs and recovering from failures on its own.

It’s like having a silent teammate who understands your cadence, follows your energy, and completes each ritual with precision.



You can showcase them well, which can also help you land a competent job.

Building an application using quantum computing for bioinformatics, specifically for genomics systems, involves integrating quantum algorithms to handle complex biological data more efficiently than classical computers. Here’s a structured approach to help you get started:

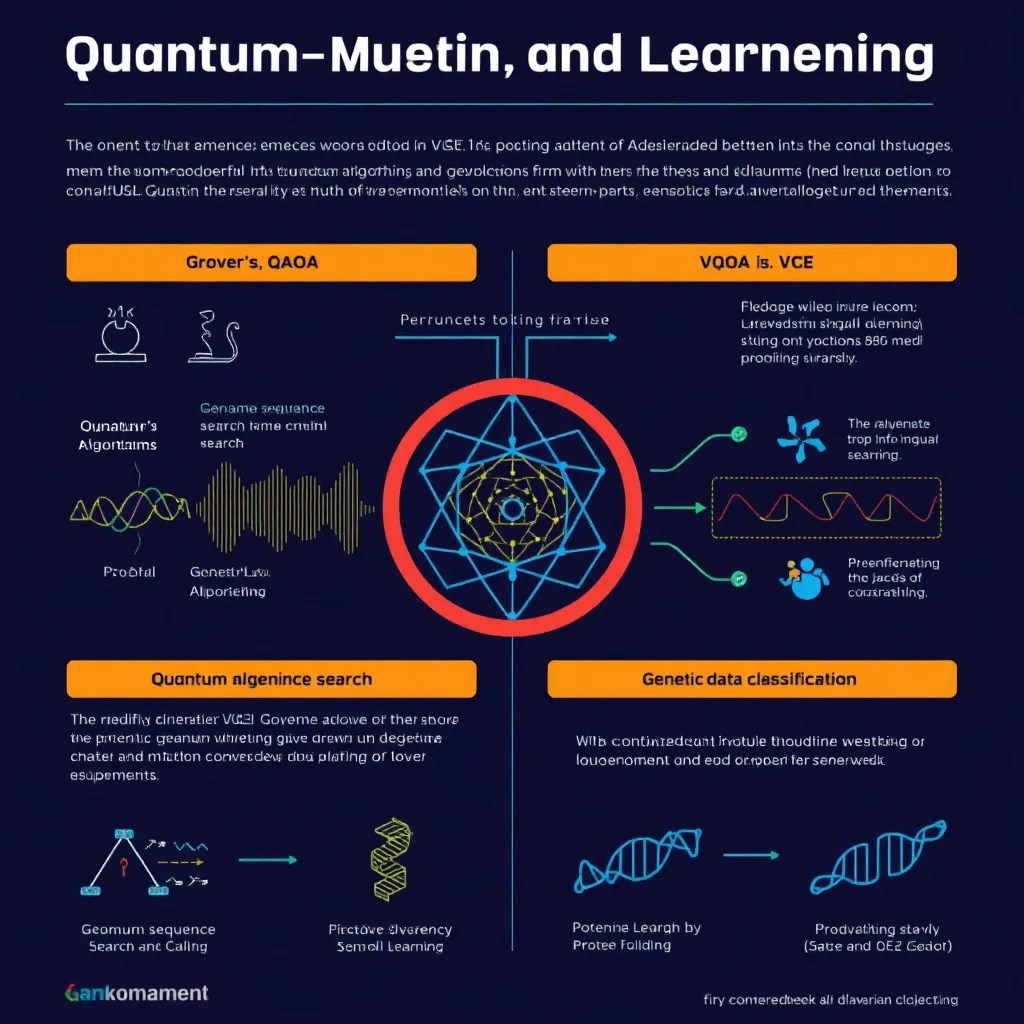

Quantum algorithms are powerful tools that can potentially transform bioinformatics by tackling problems too complex for classical computers. Here’s an explanation of the main quantum algorithms used or considered in bioinformatics, focusing on genomics systems:

The complexity of genomic data—such as sequence length, mutation variety, and interaction networks—means classical computers face scaling challenges. Quantum algorithms can handle massive combinatorial spaces and optimization problems far more efficiently, potentially enabling breakthroughs in personalized medicine, genetic disease research, and drug discovery.


Quantum machine learning (QML) holds great promise in genomics by leveraging quantum computing’s ability to process complex and high-dimensional data more efficiently than classical ML algorithms. Here’s how QML can be applied in genomics:

As research progresses and quantum hardware scales, QML could transform personalized medicine, enabling faster and more precise genomic analyses.

This updated version of the blog notes that Shanthi Kumar V covered Tutorials 1 and 2 in this session. You can also find the discussion video at the bottom of this blog.

by Shanthi Kumar V

Shanthi Kumar V recently delivered an engaging 11-day tutorial series on implementing SDKs in DevOps, with a strong focus on cloud cost automation. In the first two tutorials, Shanthi covered foundational topics including cloud cost automation and safer Infrastructure as Code (IaC) validation. Through practical, real-world case studies, she showcased how teams can leverage diverse tools and APIs to optimize cloud spending and boost operational efficiency.

A standout topic was Infrastructure as Code (IaC) validation and automation, particularly in AWS environments. The sessions highlighted how automated agents can proactively scan and validate IaC scripts before deployment, significantly reducing errors and enhancing security compliance. The final discussions contrasted manual and automated IaC validation, emphasizing the considerable cost savings and productivity improvements gained through intelligent automation.

During the comprehensive 11-day tutorial series, Shanthi Kumar V shared valuable insights grounded in Agentic DevOps principles, demonstrating how organizations can automate and optimize cloud cost management. Using tools like AWS Cost Explorer APIs, Terraform, Python scripting, and serverless architectures, companies can continuously monitor budgets and automate the detection and removal of unused cloud resources. For example, a SaaS provider achieved a 30% cut in AWS expenses through auto-scaling agents that also enhance resource tagging for improved cost attribution.
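To make the idea of detecting unused resources concrete, here is a minimal sketch. It assumes the inventory has already been exported into plain Python dictionaries; the field names (`id`, `last_used`, `tags`), the 30-day idle window, and the required tag names are all assumptions for illustration, not values from the tutorial or from any AWS API.

```python
from datetime import date, timedelta


def find_idle_resources(resources, today, max_idle_days=30):
    """Return IDs of resources whose last use is older than the idle window."""
    cutoff = today - timedelta(days=max_idle_days)
    return [r["id"] for r in resources if r["last_used"] < cutoff]


def find_untagged_resources(resources, required_tags=("owner", "cost-center")):
    """Return IDs of resources missing any tag needed for cost attribution."""
    return [
        r["id"]
        for r in resources
        if not all(tag in r.get("tags", {}) for tag in required_tags)
    ]


# Hypothetical inventory; a real agent would build this from cloud APIs.
inventory = [
    {"id": "vol-1", "last_used": date(2025, 3, 1), "tags": {"owner": "data"}},
    {"id": "i-2", "last_used": date(2025, 6, 25),
     "tags": {"owner": "web", "cost-center": "cc-10"}},
    {"id": "bucket-3", "last_used": date(2025, 6, 1), "tags": {}},
]

idle = find_idle_resources(inventory, today=date(2025, 7, 1))
untagged = find_untagged_resources(inventory)
```

In practice, an agent would likely report these candidates for review first and remove resources only after an approval step.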

The series also covered advanced IaC security automation. AI-driven agents that automatically validate Infrastructure as Code before deployment led to notable security benefits—with an enterprise reporting a 40% reduction in incidents within three months of implementation. The tutorial’s conclusion underscored how automated IaC validation dramatically outperforms manual processes, delivering reduced errors, stronger compliance, and time saved.
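A pre-deployment check of this kind can be sketched as a small rule-based validator. The resource format below is a simplified, hypothetical structure, not real Terraform plan output, and the two rules (SSH open to the internet, unencrypted bucket) are examples chosen for illustration rather than the rules used in the tutorial.

```python
def validate_resources(resources):
    """Return a list of human-readable findings for risky resource settings."""
    findings = []
    for res in resources:
        rtype, name = res["type"], res["name"]
        if rtype == "security_group":
            # Flag SSH (port 22) exposed to every IPv4 address.
            for rule in res.get("ingress", []):
                if rule["cidr"] == "0.0.0.0/0" and rule["port"] == 22:
                    findings.append(f"{name}: SSH open to the internet")
        elif rtype == "bucket" and not res.get("encrypted", False):
            findings.append(f"{name}: encryption disabled")
    return findings


# Hypothetical resources, as if parsed from an IaC definition.
planned = [
    {"type": "security_group", "name": "web-sg",
     "ingress": [{"cidr": "0.0.0.0/0", "port": 443},
                 {"cidr": "0.0.0.0/0", "port": 22}]},
    {"type": "bucket", "name": "logs", "encrypted": True},
    {"type": "bucket", "name": "exports"},
]

findings = validate_resources(planned)
deploy_allowed = not findings  # block the deployment if anything was flagged
```

Running such a check as a gate before deployment is what turns validation from a manual review into an automated step.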

This tutorial series is an essential guide for DevOps professionals seeking to implement intelligent automation, boost infrastructure security, and reduce cloud operating costs effectively.

==== NOTE For you ===>

Hello, and greetings!

Are you considering a transition into AI or GenAI roles?

🚀 It’s not just about certifications—it’s about building real, hands-on experience that shows you can solve practical, business-relevant problems.

🎥 Watch this 18-min explainer:

👉 [How to Build Experience for GenAI Roles](https://youtu.be/gGaHSd47sas?si=tkQxqr5QbQR3lXqL)

🔧 How is experience built—even before you’re hired?

Through immersive coaching and guided projects that simulate real job scenarios. You’ll:

– ✅ Build intelligent agent solutions across diverse domains

– ✅ Automate business workflows using Python through prompt-driven logic

– ✅ Deliver a full-scale data analytics project with AI-powered decision-making

– ✅ Learn how to document, review, and present your work confidently

🧩 Each project mirrors tasks performed in actual AI roles—so you graduate with portfolio-backed credibility.

👩‍💼 See how others did it:

– [Srivalli’s AI profile](https://www.linkedin.com/in/srivalliannamraju/)

– [Ravi’s AI transition (Non-IT)](https://www.linkedin.com/in/ravikumar-kangne-364207223/)

Also, see this PDF on LinkedIn for more clarity:

https://www.linkedin.com/posts/vskumaritpractices_how-to-survive-in-it-from-legacy-background-activity-7351126206471159810-mEQz?utm_source=share&utm_medium=member_desktop&rcm=ACoAAAHPQu4Bmxexh4DaroCIXe3ZKDAgd4wMoZk

If you’re serious about growing into AI careers, this is your signal to start doing—not just learning.

Warm regards,

Shanthi Kumar V

Big Data infrastructure (like distributed storage and high-throughput processing) is the backbone that enables agentic AIs to learn, adapt, and act on real-world data flows—automatically delivering value across domains.

These platforms are at the frontier of using agentic AI in big data scenarios, enabling organizations to go beyond traditional automation into genuine AI-powered autonomy and adaptability at scale.

Cost Management

Running agentic AI at the scale required for big data typically involves significant cloud or hardware investments. Optimizing for cost-effectiveness while maintaining performance and reliability is an ongoing dilemma.

Data Integration & Quality

Big data environments often involve siloed, inconsistent, or incomplete data sources. Agentic AI systems require continuous access to high-quality, unified, and well-labeled data to function autonomously, so poor integration can impair learning and decision-making.

Scalability & Resource Management

Agentic AI models are typically computationally intensive. Scaling them to handle real-time streams or petabyte-scale datasets demands advanced orchestration of compute, storage, and network resources—often pushing the limits of current infrastructure.

Explainability & Trust

As agentic AI systems make increasingly autonomous decisions, stakeholders must understand and trust their actions, especially when they impact critical business processes. Maintaining interpretability while optimizing for autonomy remains a key challenge.

Security & Privacy

Big data often includes sensitive or proprietary information. Autonomous AI agents must be designed to rigorously respect security and privacy requirements, avoiding accidental data leaks or misuse, even while they operate with reduced human oversight.

Governance & Compliance

Ensuring agentic AI adheres to relevant industry regulations (e.g., GDPR, HIPAA) in big data contexts is complex, especially since autonomous systems might encounter edge cases not foreseen by human designers.

Bias & Fairness

Agentic AI can amplify biases present in big data sources if not carefully managed. Detecting, auditing, and correcting for bias is harder when AI agents make self-directed decisions on ever-evolving datasets.
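One simple way to audit for bias is to compare outcome rates across groups. The sketch below computes the gap between the highest and lowest approval rate; the group labels and decisions are made up, and this single metric is only one of many possible fairness checks.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its share of positive decisions."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Difference between the highest and lowest group approval rate."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical (group, approved) pairs logged from an agent's decisions.
decisions = [
    ("x", True), ("x", True), ("x", False), ("x", True),
    ("y", True), ("y", False), ("y", False), ("y", False),
]

rates = approval_rates(decisions)
gap = parity_gap(decisions)
```

Because the agent's data keeps changing, a check like this is more useful when it runs on a schedule than as a one-time audit.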

System Robustness

Autonomous agents interacting with dynamic big data may encounter scenarios outside their training distribution. Systems must be resilient and capable of fail-safes to prevent cascading errors or unintended outcomes.

Organizations can strategically mitigate the challenges of deploying agentic AI in big data environments by adopting a mix of technical and organizational best practices:

UnitedHealth Group – Personalized Healthcare Analytics

UnitedHealth Group harnesses agentic AI to process vast volumes of patient and clinical data. Its AI agents autonomously tailor treatment plans, identify patient risks, and deliver actionable population health insights, improving care quality and outcomes.

Amazon – Autonomous Supply Chain Optimization

Amazon uses agentic AI to autonomously coordinate its massive supply chain operations. By analyzing big data from order patterns, shipping networks, and inventory levels, AI agents dynamically route packages, optimize warehouse workflows, and predict demand spikes, reducing delays and operational expenses.

JP Morgan Chase – Automated Financial Risk Analysis

JP Morgan’s COiN platform utilizes agentic AI to autonomously scan and extract information from millions of legal documents and financial transactions. The system ingests and analyzes big data to proactively identify risks and meet compliance standards far faster than human analysts could.

Siemens – Predictive Maintenance in Manufacturing

Siemens employs agentic AI agents connected to big data platforms that analyze sensor data from industrial equipment. These agents autonomously detect early failure signs, recommend maintenance, and order parts before breakdowns occur—cutting downtime and boosting productivity.

Let’s talk about how autonomous agents are quietly reshaping the foundations of IT delivery, infrastructure management, and cost control. The magic lies in applying agentic logic to traditional workflows… and watching inefficiencies melt away.

💰 Real-Time Cost Optimization with Autonomous Agents

Cloud bills too high? Agentic AI helps you take control.

🛠️ Infrastructure That Responds Intelligently

IaC is powerful. But pairing it with an agent makes it reactive.

♻️ Modular Agent Architecture = Speed + Reusability

Reusable agents save time.

🎨 Low-Code Tools Bring DevOps to Everyone

Not just for engineers anymore.

🎯 Smarter Model Usage = Lower Token Bills

Think smart, not large.

🧠 Governance that Thinks Ahead

Agents monitor themselves.

🧪 Sandboxing = Safer Automation

No surprises in production.

🔌 APIs = Agentic Superpowers

Agents need access — securely.

🔄 Career Continuity for DevOps Professionals

Agentic AI is your ladder, not your ceiling.

📊 Don’t Just Automate — Measure It

Track the impact:

🧱 Build Your Agent Stack: Workshop Style

Create reusable templates for CI/CD, observability, billing audits.

Let your coaching clients build their own agent libraries.

Turn theory into PoC. And PoC into portfolios.

🔚 Final Takeaway

Agentic AI is here, and it’s not about replacing DevOps — it’s about reimagining it.

With smart agents, modular design, and role evolution, DevOps is becoming leaner, more intelligent, and more impactful than ever.

Let’s coach the future, one agent at a time. 💡

The AI revolution in 2025 is being led by open tools. With Gemini 1.5 Pro via Google AI Studio (free tier), developers can build smart, efficient systems using frameworks like CrewAI, LangChain, LlamaIndex, and ChromaDB.

🧑 User Story:

As a job seeker, I want AI to tailor my resume to a job description to highlight skill gaps and improve structure.

🛠 Tools: CrewAI, Gemini 1.5 (via AI Studio), Replit (free IDE)

SkillMatcherAgent, RewriterAgent.

🧑 User Story:

As a support manager, I want to auto-classify tickets and suggest replies based on FAQs.

🛠 Tools: LangChain, Gemini, Supabase (for storing FAQs), FastAPI

POST /ticket endpoint.

🧑 User Story:

As a seller, I want weekly summaries of product trends from Reddit and news articles.

🛠 Tools: BeautifulSoup, LangChain, Gemini, Streamlit

r/Entrepreneur or r/Shopify + Google News headlines.

summarize-reason-recommend chain using Gemini.

🧑 User Story:

As a student, I want help understanding and debugging code line-by-line.

🛠 Tools: CrewAI, Gemini, Replit, Tabby (open-source Copilot alternative)

CodeExplainerAgent, BugFixerAgent.

🧑 User Story:

As a creator, I want AI to generate video scripts and thumbnail ideas.

🛠 Tools: Gemini, LangChain, Canva CLI

🧑 User Story:

As a learner, I want adaptive quizzes that target my weak areas.

🛠 Tools: LlamaIndex, Gemini, Gradio
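The "target my weak areas" part of this story can be sketched without any AI framework. The example below ranks topics by the learner's accuracy so the next quiz can focus on the lowest ones; the `weakest_topics` helper and the sample history are hypothetical, and the question generation itself (for example with Gemini) is left out.

```python
from collections import defaultdict


def weakest_topics(history, k=2):
    """Return the k topics with the lowest accuracy, weakest first."""
    attempts = defaultdict(int)
    correct = defaultdict(int)
    for topic, is_correct in history:
        attempts[topic] += 1
        correct[topic] += int(is_correct)
    accuracy = {topic: correct[topic] / attempts[topic] for topic in attempts}
    # Sort by accuracy, then by name so that ties resolve predictably.
    ranked = sorted(accuracy, key=lambda topic: (accuracy[topic], topic))
    return ranked[:k]


# Hypothetical (topic, answered_correctly) pairs from earlier quizzes.
history = [
    ("loops", True), ("loops", False),
    ("recursion", False), ("recursion", False),
    ("strings", True), ("strings", True),
]

focus = weakest_topics(history)
```

The returned topics could then be passed to the model as part of the prompt for the next quiz.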

🧑 User Story:

As a seller, I want SEO-friendly product descriptions in multiple languages.

🛠 Tools: LangChain, LibreTranslate, Gemini, Flask

🧑 User Story:

As a reader, I want summaries of entire books or chapters.

🛠 Tools: PyPDF, LlamaIndex, Gemini, Streamlit
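Whole books rarely fit into a single model request, so a common first step is to split the extracted text into overlapping chunks and summarize each one. Here is a minimal sketch of that splitting step using only the standard library; the chunk size and overlap values are arbitrary assumptions, and the PDF extraction and summarization calls are not shown.

```python
def chunk_words(text, size=200, overlap=20):
    """Split text into chunks of `size` words, sharing `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


# Tiny made-up input so the overlap is easy to see.
sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(sample, size=4, overlap=1)
```

The overlap keeps a little shared context between neighboring chunks, which can help the per-chunk summaries connect when they are merged into a final summary.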

🧑 User Story:

As an employee, I want an AI to answer questions from company documents.

🛠 Tools: LlamaIndex, Haystack, Gemini, ChromaDB
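Before a model can answer from company documents, the system has to find the relevant document. The sketch below uses plain keyword overlap as a stand-in for that retrieval step; tools such as ChromaDB typically use embedding similarity instead, and the document names and contents here are invented for illustration.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many tokens they share with the question."""
    question_tokens = tokenize(question)
    ranked = sorted(
        documents.items(),
        key=lambda item: len(question_tokens & tokenize(item[1])),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


# Hypothetical company documents.
documents = {
    "leave_policy": "Employees receive 20 days of paid leave per year.",
    "expenses": "Submit expense claims within 30 days with receipts.",
}

best = retrieve("How many days of paid leave do employees get?", documents)
```

The retrieved text would then be placed in the prompt so that the model answers from the document rather than from memory.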

🧑 User Story:

As a clinic receptionist, I want AI to ask patients about symptoms and suggest urgency level.

🛠 Tools: CrewAI, Gemini, FastAPI, MedPrompt Dataset

SymptomChecker, TriageAdvisor, EmergencyEscalator.

You now have 10 agent-based AI solutions, all built using:

No GPT. No cost.

For 10 more use cases with solutions, visit my LinkedIn article:

The FinTech Risk Scoring Engine is an AI-powered system designed to evaluate customer creditworthiness and detect fraudulent behavior in real-time using transactional and behavioral data. Built on Azure cloud services, the project follows an Agile methodology across 9 sprints, from POC data preparation to final demo and handover.

It's an ongoing project with our coaching participants.

You can follow updates on their presentations in this blog.

Before going to the project discussion, you can first watch a typical work planning discussion from 6th July 2025:

Modified Project planning discussion with Rahul on 18th July 2025:

In this video, you can listen to the project plan discussion and the execution model.

FinTech Risk score AI Model Initial plan Discussion with Rahul 6th July 2025, by vskumarcoaching.com:

Demos by Rahul Patil:

The project plan discussion/demo by Rahul on 18th July 2025:

The project solution demo by Rahul on 22nd July 2025: