AI Landscape Briefing: Key Developments and Strategic Shifts

Executive Summary

The artificial intelligence sector is experiencing a period of intense and rapid evolution, characterized by a strategic battle for dominance in emerging markets, significant leaps in model capabilities, and the expansion of AI into physical and cognitive realms. Major technology corporations are engaged in a fierce competition for the Indian market, which has become a critical strategic battleground. OpenAI has made its low-cost paid ChatGPT Go plan free for one year in India, Google is investing $15 billion in a massive AI facility, and Anthropic is establishing a major presence in Bengaluru.

Simultaneously, product innovation is accelerating. Google unveiled a suite of coordinated updates spanning quantum computing, robotics with internal monologue capabilities, and healthcare breakthroughs. Anthropic has expanded its ecosystem with a desktop application for Claude, introducing “Skills” and cloud-based coding to create a more integrated user experience, alongside a significantly cheaper and faster model, Haiku 4.5. OpenAI is shifting its focus towards action-oriented AI with the launch of its agentic web browser, ChatGPT Atlas, and the acquisition of the team behind the on-screen assistant Sky.

This progress is mirrored by advancements in AI’s application in the physical world, exemplified by Figure AI’s mass-producible humanoid robot, Figure 03, designed for domestic work. In the cognitive domain, MIT’s Neurohat project demonstrates what its researchers describe as the first integration of an LLM with real-time brain data to create adaptive learning experiences. However, these advancements are accompanied by significant challenges, highlighted by a real-world incident in which an AI security system misidentified a bag of chips as a gun, underscoring the critical need for human oversight. The overarching narrative raises a fundamental question about the future of work: whether AI will augment human capability or automate it, making people indispensable or replaceable.

——————————————————————————–

1. The Strategic Battleground: India’s AI Market

India has emerged as the second-largest market for major AI companies after the United States, prompting an aggressive push for user acquisition and infrastructure development.

- OpenAI’s Market Saturation Strategy: OpenAI has made its low-cost paid “ChatGPT Go” plan, normally priced at 399 rupees per month, free for a full year in India, starting November 4th. The plan includes access to GPT-5 and offers 10 times the message, image-generation, and file-upload capacity of the standard free tier. The move responds to competing offers, such as Perplexity’s partnership with Airtel and Google’s free Gemini Pro access for students, and aims to solidify OpenAI’s foothold in a market where its user base tripled in the last year.

- Google’s Foundational Investment: Google is investing $15 billion in India to build its second-largest data center outside the U.S., in Visakhapatnam (Vizag). Developed in partnership with Adani Group and Bharti Airtel, the project is a 1-gigawatt AI facility planned as a full campus, powered by 80% clean energy and connected by subsea cables. The investment is expected to create thousands of jobs and could significantly reshape India’s technology economy.

- Anthropic’s Expansion: After its 2025 Economic Index report identified India as the second-largest user base for Claude worldwide, Anthropic plans to establish a major presence in Bengaluru in early 2026. CEO Dario Amodei’s recent visit to meet with government officials signals a focus on developing real-world AI applications in Indian education, healthcare, and agriculture.

2. Innovations in AI Models and Platforms

Leading AI labs are releasing a flurry of updates that enhance model capabilities, improve user accessibility, and introduce novel functionalities across various domains.

Google’s Coordinated Releases

Google announced several major updates in close succession, demonstrating a multi-faceted approach to AI development.

| Category | Development | Details |

| Quantum Computing | Willow Quantum Chip | Solved a quantum computing benchmark 13,000 times faster than conventional supercomputers using a verifiable algorithm called “quantum echoes.” This has significant implications for complex problems like drug discovery. |

| Healthcare | Gemma 2-Based Cancer Research | In collaboration with Yale and DeepMind, a new AI model discovered a drug combination that, in lab tests on human cells, made tumors 50% more visible to the immune system. |

| Robotics | Gemini Robotics 1.5 | Introduces “thinking via,” an internal monologue in natural language that allows robots to reason through multi-step tasks like folding origami or preparing a salad before taking action. |

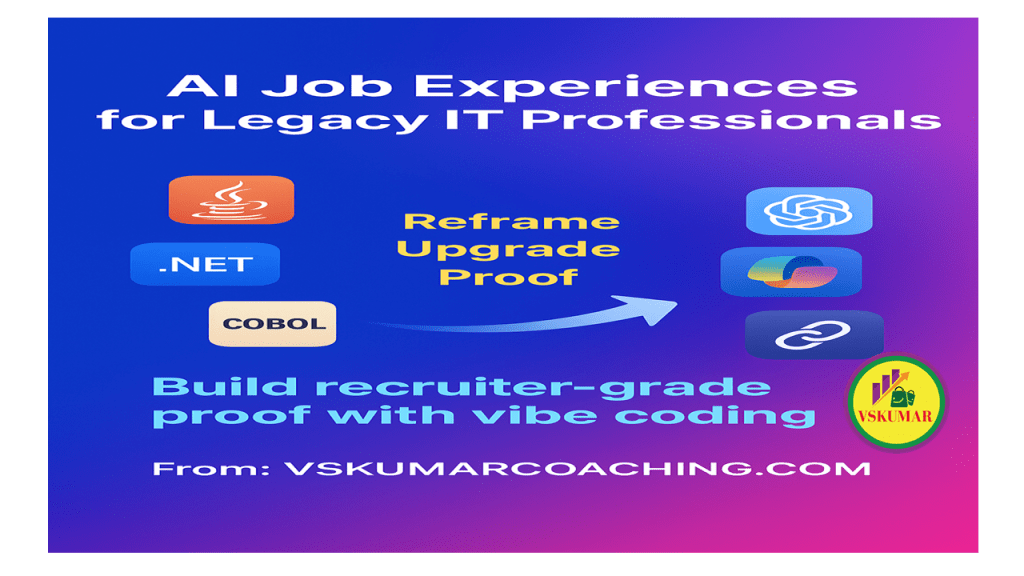

| Developer Tools | Vibe Coding in AI Studio | A drag-and-drop application creation tool. Features include “annotation mode” for making changes via voice commands and an “I’m feeling lucky” button for generating app ideas. |

| User Experience | Notebook LM Visual Styles | The note-taking tool can now transform study notes into narrated videos with six new visual styles, including anime, watercolor, and papercraft. The AI generates contextual illustrations that match the content. |

Anthropic’s Claude Ecosystem Expansion

Anthropic has focused on deeply integrating its Claude model into user workflows while making its technology more accessible and powerful.

- Desktop Integration: Claude is now available as a desktop application for Windows and Mac, allowing users to access it with a keyboard shortcut without switching context.

- Desktop Extensions & Skills: Using the Model Context Protocol (MCP), the desktop app can connect to local files, databases, and code. “Claude Skills” are custom, reusable folders of instructions and scripts that Claude loads automatically when they are relevant to a task, letting it reuse a user’s specific workflows.

- Cloud-Based Coding: Users can now assign coding tasks to Claude to run in the cloud, including from a mobile phone (currently iOS only).

- Claude Haiku 4.5: Anthropic reports that this new model offers coding performance similar to Claude Sonnet 4, its state-of-the-art model five months earlier, at one-third the cost and more than twice the speed. It is the first Haiku model to support “extended thinking,” which lets it reason through complex problems step by step before answering.
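
A toy model can make the “automatically load” behavior of Skills concrete. Anthropic describes each skill as a folder containing a SKILL.md file whose frontmatter gives the skill a name and a short description; only those short descriptions are kept in view up front, and a skill’s full instructions are read when it becomes relevant. The Python sketch below mimics that pattern under stated assumptions: the folder and skill names are hypothetical, the parser handles only simple `key: value` lines rather than full YAML, and the keyword-overlap test in `select_skills` is a stand-in for the model’s own judgment of relevance, not how Claude actually decides.

```python
import re
import tempfile
from pathlib import Path


def parse_frontmatter(text: str) -> dict[str, str]:
    """Parse simple 'key: value' lines between leading '---' markers (not full YAML)."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def index_skills(root: Path) -> dict[str, dict]:
    """Read only each skill's short metadata up front; full bodies stay on disk."""
    index: dict[str, dict] = {}
    for skill_md in sorted(root.glob("*/SKILL.md")):
        meta = parse_frontmatter(skill_md.read_text(encoding="utf-8"))
        if "name" in meta:
            index[meta["name"]] = {"description": meta.get("description", ""), "path": skill_md}
    return index


def words(text: str) -> set[str]:
    """Lowercase words longer than three letters (a crude stop-word filter)."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3}


def select_skills(index: dict[str, dict], task: str) -> list[str]:
    """Hypothetical relevance test: any word overlap between task and description."""
    task_words = words(task)
    return [name for name, info in index.items() if task_words & words(info["description"])]


def load_skill(index: dict[str, dict], name: str) -> str:
    """Load a skill's full instructions only after it has been selected."""
    return index[name]["path"].read_text(encoding="utf-8")


def demo(task: str) -> tuple[list[str], list[str]]:
    """Create two throwaway (hypothetical) skill folders, then select and load skills."""
    skills = {
        "report-formatter": "Format quarterly reports using the company spreadsheet template.",
        "release-notes": "Draft release notes from a list of merged pull requests.",
    }
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for name, description in skills.items():
            folder = root / name
            folder.mkdir()
            (folder / "SKILL.md").write_text(
                f"---\nname: {name}\ndescription: {description}\n---\n"
                f"# {name}\nStep-by-step instructions would go here.\n",
                encoding="utf-8",
            )
        index = index_skills(root)
        selected = select_skills(index, task)
        bodies = [load_skill(index, name) for name in selected]
    return selected, bodies


if __name__ == "__main__":
    selected, bodies = demo("Please format the quarterly reports")
    print(selected)
```

Keeping only names and descriptions in the index is the point of the design: many skills can be installed without their full instructions crowding the context until one is actually needed.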

OpenAI’s Push Towards Action-Oriented AI

OpenAI is evolving ChatGPT from a conversational tool into an agent capable of performing tasks on behalf of the user.

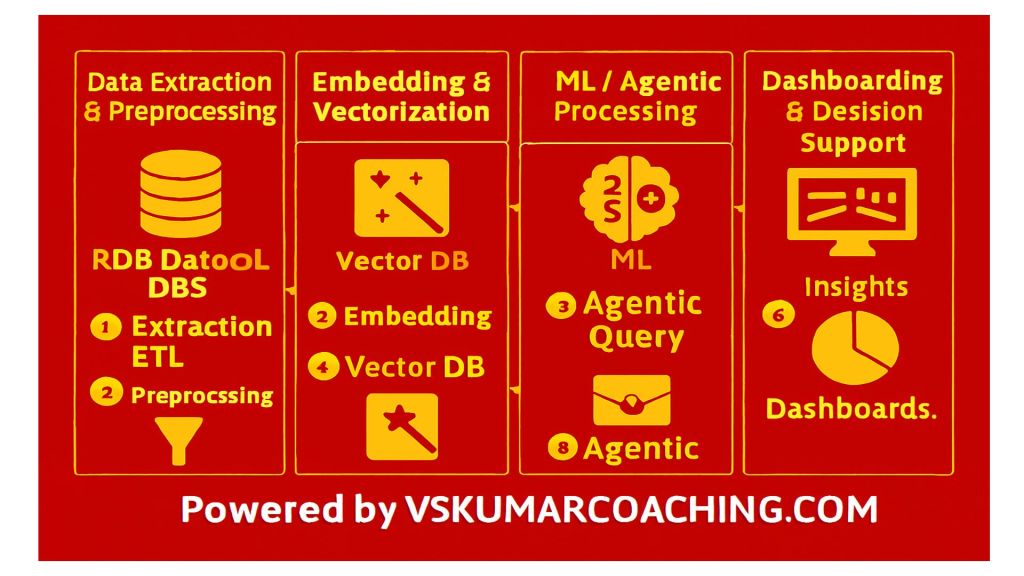

- Atlas Web Agent: OpenAI launched ChatGPT Atlas, a web browser with ChatGPT built in, whose agent mode can take actions on the user’s behalf, such as booking flights, conducting research, clicking buttons, and filling out forms.

- Acquisition of Sky: OpenAI acquired Software Applications Incorporated, the company behind the Mac application Sky. Sky is an AI assistant that can see what is on a user’s screen and interact with it by clicking buttons. The team, which previously created Workflow (the precursor to Apple’s Shortcuts), will integrate this technology into ChatGPT.

Emerging Challengers

New and specialized models are entering the market, challenging established players in unique ways.

- xAI’s Grokipedia: Elon Musk launched an AI-powered encyclopedia intended to rival Wikipedia, with the stated goal of delivering “the whole truth.” It launched with 885,000 articles, compared to Wikipedia’s 7 million. The platform is controversial; while supporters praise its unfiltered approach to sensitive topics, critics allege bias, poor search functionality, and content heavily derived from Wikipedia without proper citation.

- Alibaba’s Qwen3-VL: Alibaba released compact but capable vision-language models in 2-billion- and 32-billion-parameter versions. The 32B model reportedly outperformed models such as GPT-5 mini and Claude Sonnet 4 on selected benchmarks for science problems, video understanding, and agentic tasks.

3. AI in the Physical and Cognitive Worlds

Advancements are extending beyond software, with AI now being integrated into advanced robotics and directly with the human brain.

Humanoid Robotics: Figure 03

The robotics company Figure AI has made significant strides toward creating a commercially viable humanoid robot for domestic use.

- Design for Mass Manufacturing: Figure 03, featured on the cover of Time magazine’s Best Inventions of 2025 issue, is engineered for mass production. The company has built a factory, BotQ, with an initial capacity of up to 12,000 units per year, scaling to 100,000 units over four years.

- Hardware and AI Synergy: The robot runs on Helix AI, a proprietary vision-language-action system. Its hardware is purpose-built to support this AI, featuring cameras with twice the frame rate and a 60% wider field of view than those of its predecessor, embedded palm cameras for work in confined spaces, and fingertip sensors that can detect forces as small as 3 grams (about the weight of a paperclip).

- Human-Centric Design: The robot is 9% lighter than Figure 02, covered in soft, washable textiles, and features wireless charging via coils in its feet.

- Data Collection Methodology: Figure employs human pilots wearing VR headsets to perform household tasks, generating large training datasets that let Helix AI learn from every success and failure.

Neuro-adaptive Technology: MIT’s Neurohat

Researchers at the MIT Media Lab have created what they describe as the first system to directly integrate a large language model with real-time brain data.

- Brain-Computer Interface: The “Neurohat” is a headband that continuously monitors a user’s brain activity to calculate an “engagement index,” which indicates whether the user is focused, overloaded, or bored.

- Adaptive Conversation: Based on this index, the integrated LLM (GPT-4) automatically adjusts its conversational style, changing the complexity, tone, and pacing of information. It presents more challenging material when the user is engaged and simplifies explanations when focus dips.

- Pilot Study Findings: A preliminary study found that Neurohat significantly increased both measured and self-reported user engagement. However, it did not produce an immediate improvement in short-term learning outcomes such as quiz scores, suggesting that it makes the learning process more engaging rather than making users “smarter overnight.”
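
The adaptive loop described above can be sketched in a few lines. A widely used EEG engagement index is the ratio of beta-band power to the sum of alpha- and theta-band power, β/(α+θ); the briefing does not say which formula Neurohat uses, so applying this one here is an assumption. The thresholds, the three-window smoothing, the style wording, and the names `EngagementTracker` and `build_prompt` are likewise hypothetical, chosen only to mirror the behavior the text describes: simpler explanations when engagement dips and harder material when it is high.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class BandPower:
    """EEG band power for one time window (any consistent unit)."""
    theta: float  # roughly 4-8 Hz
    alpha: float  # roughly 8-13 Hz
    beta: float   # roughly 13-30 Hz


def engagement_index(p: BandPower) -> float:
    """EEG engagement ratio beta / (alpha + theta); assumed, not confirmed for Neurohat."""
    return p.beta / (p.alpha + p.theta)


# Hypothetical thresholds; a real system would calibrate them for each user.
LOW, HIGH = 0.4, 0.7

# Hypothetical style instructions keyed by engagement level.
STYLE = {
    "low": "Focus is dipping: simplify the explanation and use shorter steps.",
    "steady": "Keep the current level of detail and pacing.",
    "high": "The learner is engaged: introduce more challenging material.",
}


class EngagementTracker:
    """Averages the index over recent windows and maps it to a level."""

    def __init__(self, window: int = 3) -> None:
        self.recent: deque = deque(maxlen=window)

    def update(self, p: BandPower) -> str:
        self.recent.append(engagement_index(p))
        smoothed = sum(self.recent) / len(self.recent)
        if smoothed < LOW:
            return "low"
        if smoothed > HIGH:
            return "high"
        return "steady"


def build_prompt(level: str, user_message: str) -> str:
    """Prepend the style instruction to the message sent to the LLM."""
    return f"[Style: {STYLE[level]}]\n{user_message}"


if __name__ == "__main__":
    tracker = EngagementTracker()
    # Raw indices for these windows: 0.2, 1.0, 1.0
    windows = [BandPower(5, 5, 2), BandPower(4, 4, 8), BandPower(3, 3, 6)]
    for w in windows:
        level = tracker.update(w)
        print(level, build_prompt(level, "Explain photosynthesis."))
```

For example, windows whose raw index is 0.2, 1.0, and 1.0 give smoothed values of 0.2, 0.6, and about 0.73, so the tracker moves from `"low"` to `"steady"` to `"high"`. Averaging over several windows keeps a single noisy reading from flipping the tutor’s style.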

4. Critical Challenges and Societal Implications

The rapid deployment of AI technologies brings to light profound ethical questions and concerns about safety and the future of human labor.

The Perils of Automation without Oversight

A recent event in Baltimore serves as a stark warning about the risks of deploying AI in critical, real-world scenarios without robust human verification.

- The “Doritos Gun” Case Study: A 16-year-old was confronted at gunpoint by police, who arrived in eight patrol cars, after an AI gun-detection system from the company Omnilert misidentified his crumpled Doritos bag as a firearm. The incident highlights a failure of the human review processes meant to verify the AI’s alert before a high-stakes police response, which turned an algorithm’s error into a traumatic event.
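
The safeguard that failed here can be stated as a simple rule: an automated detection may trigger a response only after a named person has reviewed and confirmed it. The following Python sketch expresses that human-in-the-loop gate; the `Alert` fields, status names, and reviewer labels are hypothetical and do not describe Omnilert’s product or any school district’s actual procedure.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    CONFIRMED = "confirmed"
    DISMISSED = "dismissed"


@dataclass
class Alert:
    """An automated detection awaiting human verification (hypothetical fields)."""
    camera: str
    label: str           # what the detector believes it saw, e.g. "firearm"
    confidence: float    # detector score in [0, 1]; informational only
    status: Status = Status.PENDING_REVIEW
    log: list[str] = field(default_factory=list)


def review(alert: Alert, reviewer: str, is_threat: bool) -> None:
    """Record a human decision; a decided alert cannot be silently re-decided."""
    if alert.status is not Status.PENDING_REVIEW:
        raise ValueError(f"alert already {alert.status.value}")
    alert.status = Status.CONFIRMED if is_threat else Status.DISMISSED
    alert.log.append(f"{reviewer}: {alert.status.value}")


def may_dispatch(alert: Alert) -> bool:
    """Escalate only on human confirmation; detector confidence alone never suffices."""
    return alert.status is Status.CONFIRMED


if __name__ == "__main__":
    alert = Alert(camera="entrance-2", label="firearm", confidence=0.9)
    print(may_dispatch(alert))  # not yet reviewed, so no dispatch
    review(alert, "safety-officer", is_threat=False)
    print(may_dispatch(alert), alert.log)
```

The deliberate choice is that `may_dispatch` ignores `confidence`: a high detector score could be used to prioritize an alert for review, but it can never stand in for the review itself, and a dismissed alert stays dismissed.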

The Future of Work: Augmentation vs. Automation

The discourse around AI’s economic impact is crystallizing around a central question: whether humans will use AI to become more capable or be replaced by it.

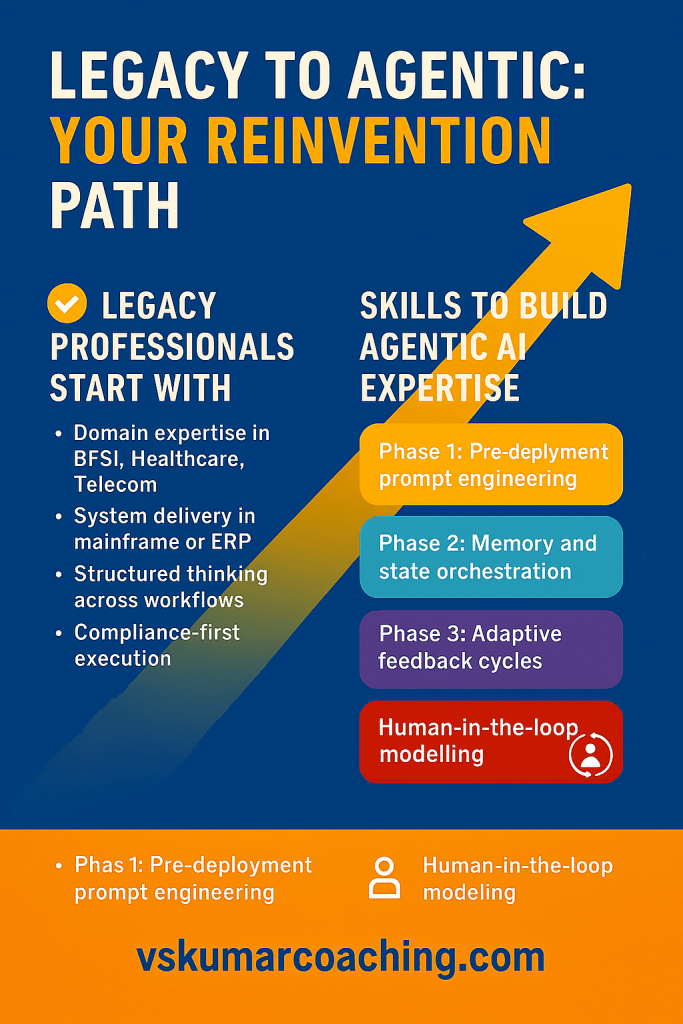

- Replaceable or Indispensable: Anthropic’s upcoming economic index report frames the critical choice facing the workforce: whether individuals use AI merely to get answers faster or to become “fundamentally smarter,” augmenting their skills until they are “irreplaceable.”

- Business Automation Trends: The report notes that 77% of business use of AI through Anthropic’s API already follows automation patterns, delegating complete tasks, and that this includes not just simple, low-cost work but also “high-value, complex work.” This suggests a widening gap between those who leverage AI for augmentation and those whose roles are being automated.