Below are 10 interview-grade questions with detailed, practical answers designed to help professionals move into AI roles quickly, grounded in the 7 irreplaceable AI-age skills you shared.

These are suitable for AI Engineer, AI Product Manager, AI Consultant, GenAI DevOps, AI Business Analyst, and AI Coach roles.

1. Why is problem framing more important than prompt engineering when moving into AI roles?

Answer:

Problem framing is the foundation of every successful AI solution. Before writing prompts or selecting models, professionals must clearly define what problem is being solved, for whom, and how success will be measured. Poorly framed problems lead to impressive but useless AI outputs.

In AI roles, the value you bring is not model access but clarity of intent. AI tools can generate answers endlessly, but they cannot determine business relevance. This is consistent with the World Economic Forum ranking analytical thinking, the skill that underpins problem framing, as the most sought-after core skill for employers heading into 2030.

For example, instead of asking an AI, “Improve this dashboard”, a strong AI professional reframes it as:

“Create a decision-focused dashboard for CXOs that highlights revenue leakage risks within 30 seconds of viewing.”

This clarity turns AI from a chatbot into a decision engine, which is what organizations pay for.
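One way to make framing concrete is to treat a request as a small structure that cannot be sent until every part is filled in. The Python sketch below is a minimal illustration, assuming four fields (deliverable, audience, decision, success criterion). The `FramedProblem` class and its field names are hypothetical, not a standard tool, and the result is a plain prompt string you could pass to any model.

```python
from dataclasses import dataclass


@dataclass
class FramedProblem:
    """Hypothetical structure for a framed request: what, for whom, and how success is judged."""
    deliverable: str        # what is being produced
    audience: str           # who will use it
    decision: str           # the decision the deliverable must support
    success_criterion: str  # how we will know it worked

    def missing_fields(self) -> list[str]:
        # A framing is incomplete if any field is blank.
        return [name for name, value in vars(self).items() if not value.strip()]

    def to_prompt(self) -> str:
        missing = self.missing_fields()
        if missing:
            raise ValueError(f"Problem is not fully framed; missing: {', '.join(missing)}")
        return (
            f"Create a {self.deliverable} for {self.audience}. "
            f"It must support this decision: {self.decision}. "
            f"Success criterion: {self.success_criterion}."
        )


# The vague request from the text: only the deliverable is known.
vague = FramedProblem(deliverable="dashboard", audience="", decision="", success_criterion="")

# The reframed request from the text.
framed = FramedProblem(
    deliverable="decision-focused dashboard",
    audience="CXOs",
    decision="where revenue is leaking and how much is at risk",
    success_criterion="the main revenue leakage risks are clear within 30 seconds of viewing",
)

print(vague.missing_fields())  # ['audience', 'decision', 'success_criterion']
print(framed.to_prompt())
```

The design choice is to fail loudly: a vague request like "Improve this dashboard" raises an error instead of producing a polished but unfocused answer.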

2. How does AI literacy differ from basic tool usage, and why does it accelerate career growth?

Answer:

AI literacy goes beyond knowing how to use ChatGPT or Copilot. It includes understanding model strengths, limitations, hallucination risks, token behavior, context windows, and grounding techniques.

AI-literate professionals know:

- When to use LLMs vs rules vs automation

- How to structure prompts for accuracy and reuse

- How to combine AI with human judgment

LinkedIn lists AI literacy as the fastest-growing skill of 2025, and job postings that require AI skills have been reported to pay around 28% more. Companies reward professionals who reduce AI risk while increasing AI output, not those who just generate text.

3. What does “workflow orchestration” mean in real-world AI jobs?

Answer:

Workflow orchestration means designing chains of AI agents and tools that work together like a digital team. Instead of one AI doing everything, the work is divided among roles such as researcher, reviewer, strategist, and executor.

For example:

- Claude → Product Manager (requirements)

- ChatGPT → Technical Designer

- Gemini → Compliance & Bias Review

- Automation → Deployment or Reporting

Done well, this can let one professional produce output that once required a 5–10 person team, which is why founders and enterprises value this skill highly. AI roles increasingly reward system thinkers over individual task executors.
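The hand-off pattern in the example list can be sketched as a sequential pipeline in which each role's output becomes the next role's input. This is a minimal Python illustration under stated assumptions: `fake_model` is a hypothetical stub standing in for real model or automation calls (no vendor API is used), and the role names simply mirror the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Stage:
    role: str                       # e.g. "Product Manager"
    instruction: str                # what this role is asked to do
    run: Callable[[str, str], str]  # (instruction, input) -> output; stands in for any model or tool


@dataclass
class Pipeline:
    stages: list[Stage]
    log: list[tuple[str, str]] = field(default_factory=list)

    def execute(self, brief: str) -> str:
        artifact = brief
        for stage in self.stages:
            # Each stage receives the previous stage's output, like a hand-off between team members.
            artifact = stage.run(stage.instruction, artifact)
            self.log.append((stage.role, artifact))
        return artifact


def fake_model(name: str) -> Callable[[str, str], str]:
    """Hypothetical stub standing in for a real model API call; it just tags the text."""
    def run(instruction: str, text: str) -> str:
        return f"[{name}: {instruction}] {text}"
    return run


pipeline = Pipeline(stages=[
    Stage("Product Manager", "write requirements", fake_model("model-a")),
    Stage("Technical Designer", "propose a design", fake_model("model-b")),
    Stage("Compliance & Bias Reviewer", "flag risks", fake_model("model-c")),
    Stage("Reporter", "summarize for stakeholders", fake_model("automation")),
])

result = pipeline.execute("Customer churn alert feature")
for role, _ in pipeline.log:
    print(role)
```

Keeping a per-stage log makes the chain auditable: a reviewer can see which role introduced a given claim before anything is deployed or reported.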

4. Why is verification and critical thinking a non-negotiable AI skill?

Answer:

AI systems are often confidently wrong. Even enterprise-grade tools with citations can hallucinate or misinterpret data. In AI roles, your responsibility shifts from producing content to checking it for accuracy, bias, and risk.

Strong verification habits include:

- Cross-checking answers across multiple models

- Asking the AI to state its assumptions and confidence, treating self-ratings as a prompt for review rather than proof

- Reviewing outputs for bias, missing context, or legal risk

This skill protects organizations from compliance failures, reputational damage, and costly mistakes, which makes you indispensable even as AI improves.
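Cross-checking across models can be partly mechanized: ask several models the same question, compare their answers, and route low agreement to a human. The Python sketch below assumes the answers have already been collected into a dictionary keyed by a hypothetical model name, and it uses deliberately crude string normalization; a real system would compare extracted facts rather than whole sentences. Agreement is a signal, not proof, since models can share the same mistake.

```python
from collections import Counter


def normalize(answer: str) -> str:
    # Crude normalization so case, extra whitespace, and a trailing period don't count as disagreement.
    return " ".join(answer.lower().strip().rstrip(".").split())


def cross_check(answers: dict[str, str], min_agreement: float = 2 / 3) -> dict:
    """Compare several models' answers to one question and flag low agreement for human review."""
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(normalize(a) for a in answers.values())
    top_answer, top_count = counts.most_common(1)[0]
    agreement = top_count / len(answers)
    return {
        "consensus": top_answer,
        "agreement": agreement,
        "needs_human_review": agreement < min_agreement,
        "dissenting_models": sorted(m for m, a in answers.items() if normalize(a) != top_answer),
    }


report = cross_check({
    "model-a": "Q3 revenue fell 4%.",
    "model-b": "q3 revenue fell 4%",
    "model-c": "Q3 revenue rose 2%.",
})
print(report)
```

Even when the majority agrees, the dissenting model is listed, so a reviewer can check whether the minority answer is the one that is actually right.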

5. How does creative thinking differentiate humans from AI in professional settings?

Answer:

AI excels at generating options; humans excel at choosing meaning. Creative thinking involves selecting what matters, connecting unrelated ideas, and designing emotional resonance.

In AI roles:

- AI drafts content

- Humans define narrative, insight, and originality

This “last 20%” is where differentiation happens. According to the World Economic Forum, creative thinking is expected to grow in importance faster than analytical thinking. That matters because creativity is what converts AI output into business impact.

6. What is repurposing and synthesis, and why is it called “unfair leverage”?

Answer:

Repurposing is the ability to take one strong idea and use AI to convert it into multiple formats: blogs, reels, emails, decks, and training modules.

For example:

- One AI-assisted webinar can become:

  - 10 LinkedIn posts

  - 5 short videos

  - 1 email sequence

  - 1 sales page

AI roles increasingly value professionals who maximize reach with minimal effort, not those who keep recreating from scratch. This skill compounds visibility, authority, and income.
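A repurposing plan is essentially a fan-out: one source asset expanded into a list of drafting tasks. This minimal Python sketch reproduces the webinar example. The counts come from the text, while `FormatSpec`, `repurposing_tasks`, and the guidance strings are hypothetical illustrations. Each task would still be drafted with an AI tool and reviewed by a human.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FormatSpec:
    name: str
    count: int
    guidance: str  # hypothetical style notes for this output format


# Mirrors the webinar example: counts are from the text, guidance strings are illustrative.
WEBINAR_PLAN = [
    FormatSpec("LinkedIn post", 10, "one insight per post, under 200 words"),
    FormatSpec("short video script", 5, "one idea per script, hook in the first line"),
    FormatSpec("email sequence", 1, "multi-part sequence leading to one call to action"),
    FormatSpec("sales page", 1, "problem, solution, proof, offer"),
]


def repurposing_tasks(source_title: str, plan: list[FormatSpec]) -> list[str]:
    """Expand one source asset into individual drafting requests, one per output piece."""
    tasks = []
    for spec in plan:
        for i in range(1, spec.count + 1):
            tasks.append(
                f"From '{source_title}', draft {spec.name} {i}/{spec.count}. Guidance: {spec.guidance}."
            )
    return tasks


tasks = repurposing_tasks("AI-assisted webinar", WEBINAR_PLAN)
print(len(tasks))  # 17
print(tasks[0])
```

Writing the plan down as data is what makes the leverage repeatable: the next webinar reuses the same plan instead of starting from scratch.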

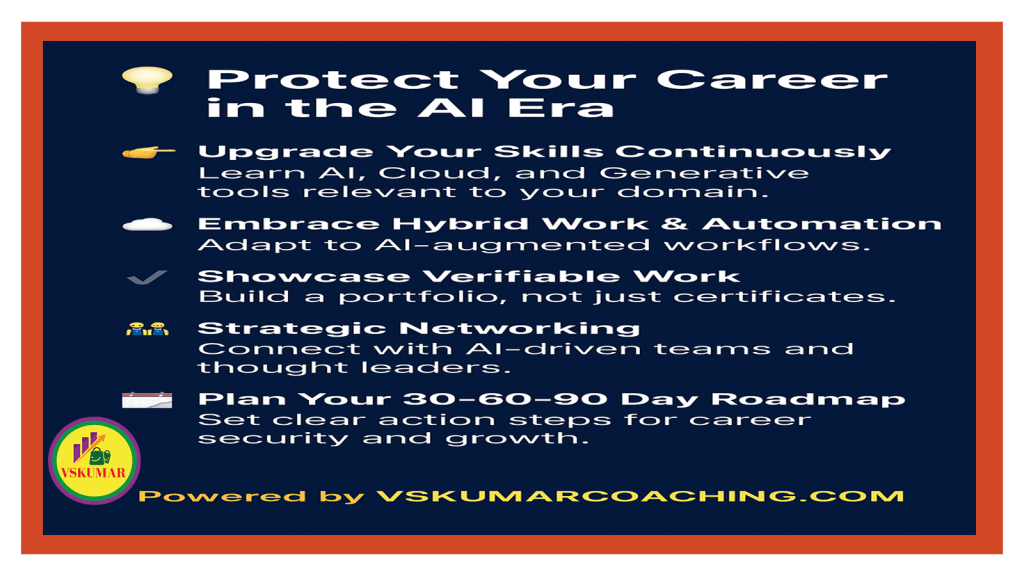

7. How does continuous learning protect AI professionals from obsolescence?

Answer:

The World Economic Forum estimates that 39% of workers' existing skills will be transformed or become outdated between 2025 and 2030. Continuous learning is the meta-skill that keeps you relevant as AI evolves rapidly.

AI professionals must:

- Learn from first principles

- Rebuild skills as tools change

- Avoid over-reliance on automation

Ironically, as AI makes things easier, discipline becomes more valuable. Those who maintain the ability to struggle, learn, and adapt will outpace those who rely blindly on tools.

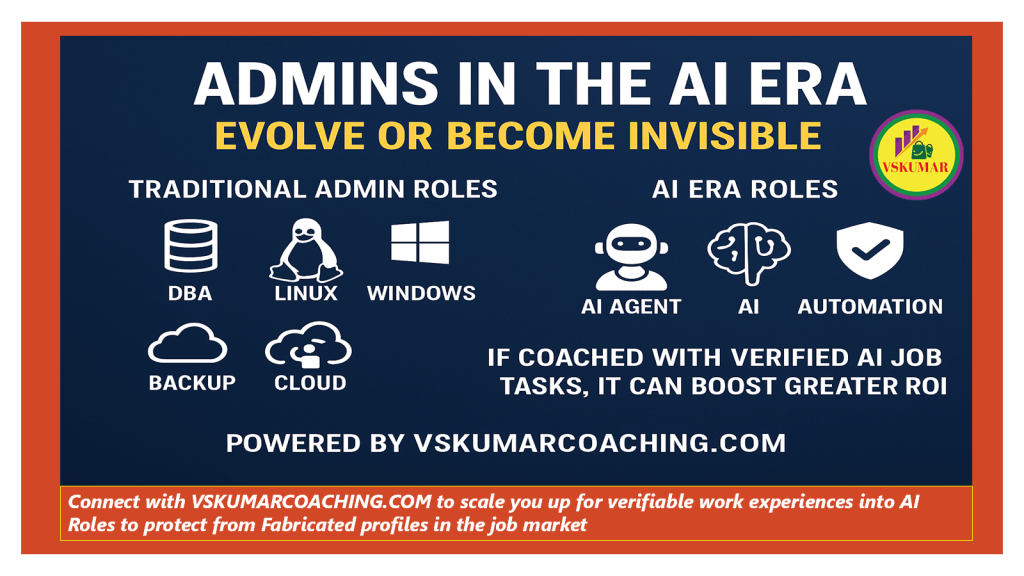

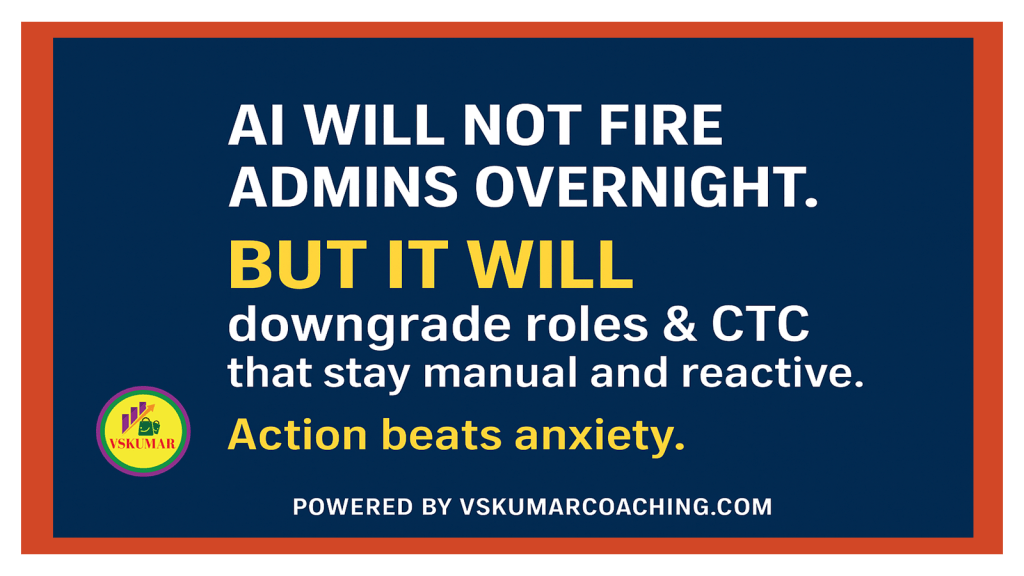

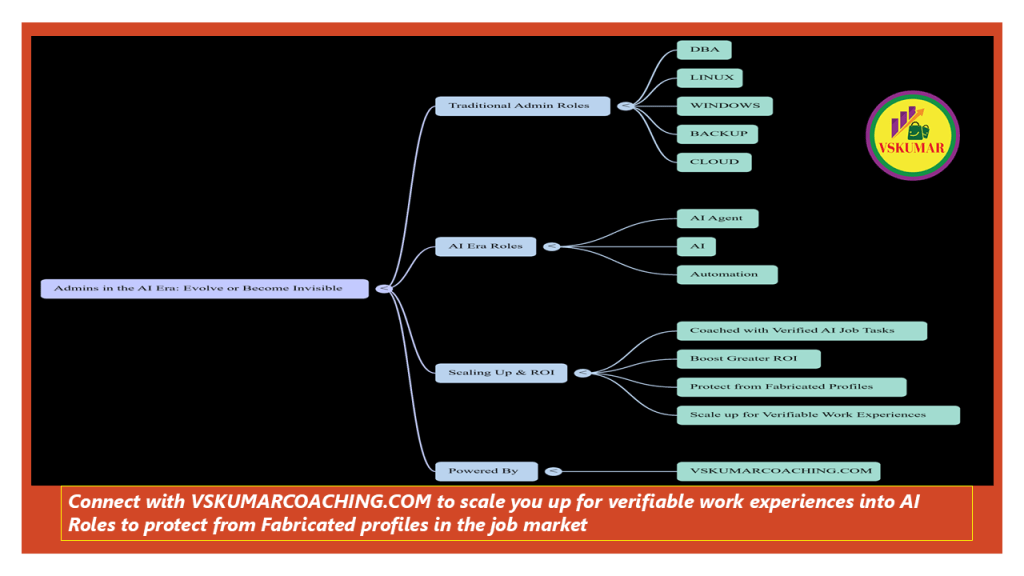

8. How should professionals transition from traditional IT and business roles into AI roles quickly?

Answer:

The fastest transition path is:

- Keep your domain expertise (DevOps, QA, Finance, HR, Ops)

- Layer AI skills on top (problem framing, workflows, verification)

- Position yourself as an AI-enabled domain expert

AI does not replace specialists; it amplifies them. A DevOps engineer who understands AI workflows is far more valuable than a generic AI beginner.

9. What mindset shift is required to become “AI-irreplaceable”?

Answer:

The key shift is moving from:

“I do tasks”

to

“I design outcomes using AI systems”

Irreplaceable professionals focus on:

- Decision quality

- Risk reduction

- Speed + accuracy

- Business relevance

They treat AI as a force multiplier, not a crutch.

10. What is the biggest mistake professionals make when adopting AI?

Answer:

The biggest mistake is chasing tools without thinking deeply about the problem. Many jump into prompts without understanding the problem, audience, or success criteria.

AI rewards clarity, not curiosity alone. Professionals who slow down to frame, verify, and synthesize outperform those who chase every new tool.

The future belongs to those who think better with AI, not those who simply use it.