Azure Data Factory (ADF) is a cloud-based data integration service that orchestrates and automates the movement and transformation of data. To ensure secure, scalable, and maintainable pipelines, Azure enforces a role-based access control (RBAC) model. Role assignments restrict who can create, modify, delete, or monitor ADF resources, safeguarding production workloads and enforcing separation of duties. In this article, we explore the built-in and custom roles for ADF, discuss how to assign roles at various scopes, and illustrate best practices for controlling access in development, test, and production environments.

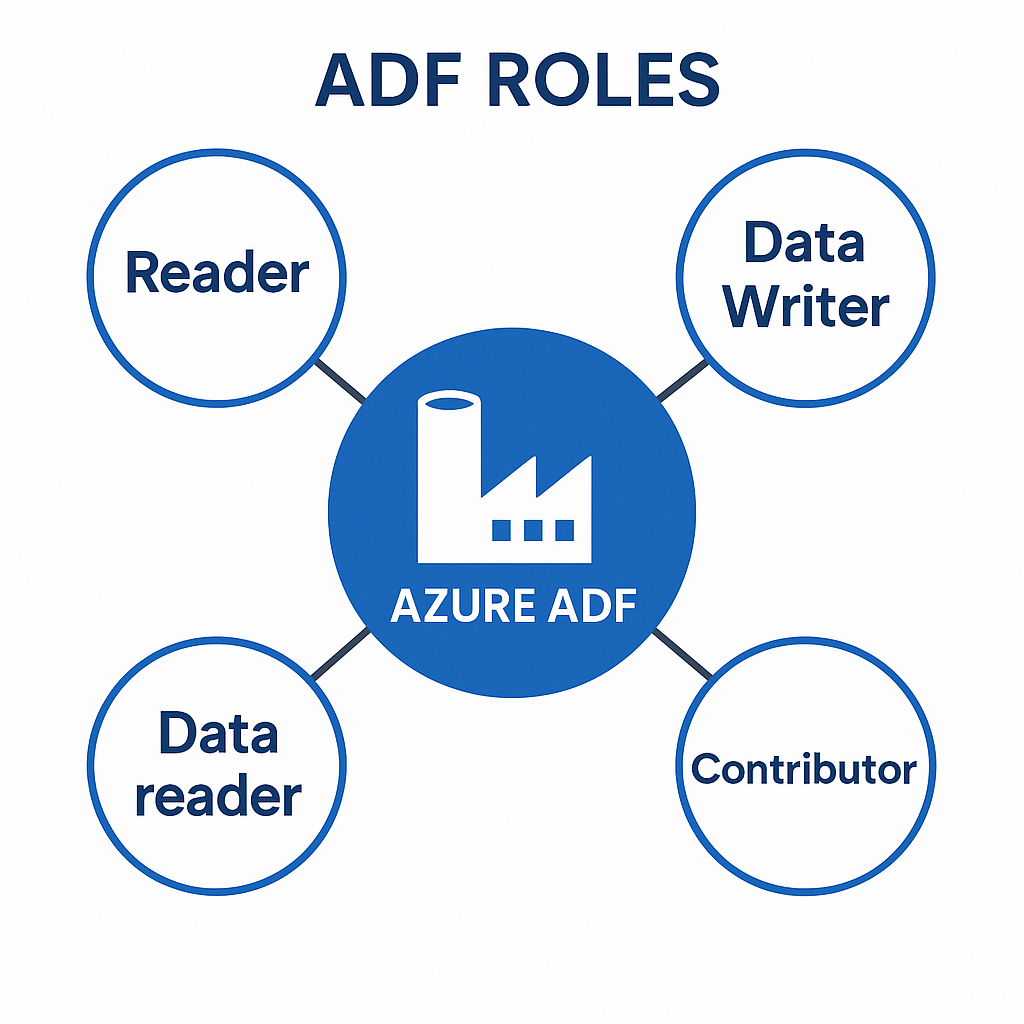

Built-In Azure RBAC Roles for ADF

Azure provides a set of built-in roles that grant coarse-grained permissions over ADF instances and their child resources (datasets, linked services, pipelines, triggers, integration runtimes). The most relevant roles include:

- Owner

Grants full authority over all resources, including the ability to delegate access through Azure RBAC role assignments. This role is typically reserved for subscription administrators and key stakeholders who must manage access, policy, and governance settings across all resources. (Azure RBAC overview)

- Contributor

Permits create, update, and delete actions on all resource types, but does not allow assigning roles. It is a superset of the Data Factory Contributor role, so users with Contributor at resource or resource-group scope can manage ADF child resources without an explicit Data Factory Contributor assignment. (Roles and permissions for Azure Data Factory)

- Reader

Provides read-only access to view resource properties, but cannot modify or delete. Ideal for auditors or stakeholders who need visibility without the risk of configuration changes. (Azure built-in roles)

- Data Factory Contributor

ADF’s specialized role that allows creation, editing, and deletion of a data factory and its child resources via the Azure portal. Members of this role can deploy Resource Manager templates for pipelines and integration runtimes, manage Application Insights alerts, and open support tickets. This role does not permit creating non-ADF resources. (Roles and permissions for Azure Data Factory)

Assigning Roles at Different Scopes

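Azure RBAC assignments can target four levels of scope: management group, subscription, resource group, and resource. The three most relevant for ADF are: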

- Subscription: Broadest scope. Granting a role here applies to all resource groups and resources within the subscription. Suitable for enterprise teams managing multiple data factories across environments.

- Resource Group: Applies the role to all resources in that group. Use this scope for environment-specific assignments (e.g., a group containing dev or test ADF instances).

- Resource: Narrowest scope. Assign roles on a single data factory to isolate permissions to just that instance.

For example, to let a user work with any ADF in a subscription, assign the Data Factory Contributor role at the subscription scope. To limit a user to just one factory, assign Contributor (or a custom role) at the resource scope. (Roles and permissions for Azure Data Factory)
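Role assignments flow downward through these scopes: a role assigned at a subscription or resource group also applies to every factory beneath it. A minimal Python sketch of that rule, assuming the standard Azure resource ID format and hypothetical subscription, resource group, and factory names. It compares IDs by whole path segments and ignores management groups, whose IDs are not path prefixes of subscription IDs.

```python
def subscription_scope(subscription_id: str) -> str:
    return f"/subscriptions/{subscription_id}"


def resource_group_scope(subscription_id: str, resource_group: str) -> str:
    return f"{subscription_scope(subscription_id)}/resourceGroups/{resource_group}"


def factory_scope(subscription_id: str, resource_group: str, factory: str) -> str:
    return (
        f"{resource_group_scope(subscription_id, resource_group)}"
        f"/providers/Microsoft.DataFactory/factories/{factory}"
    )


def assignment_applies(assignment_scope: str, resource_id: str) -> bool:
    """Return True if a role assigned at assignment_scope also covers resource_id."""
    # Azure resource IDs are case-insensitive, so compare lowercased paths,
    # and require a whole-segment match so 'rg-dev' does not cover 'rg-dev2'.
    scope = assignment_scope.lower().rstrip("/")
    target = resource_id.lower().rstrip("/")
    return target == scope or target.startswith(scope + "/")


sub = "00000000-0000-0000-0000-000000000000"  # hypothetical subscription ID
dev_factory = factory_scope(sub, "rg-adf-dev", "adf-dev-01")
prod_factory = factory_scope(sub, "rg-adf-prod", "adf-prod-01")

print(assignment_applies(subscription_scope(sub), prod_factory))                # True
print(assignment_applies(resource_group_scope(sub, "rg-adf-dev"), dev_factory))   # True
print(assignment_applies(resource_group_scope(sub, "rg-adf-dev"), prod_factory))  # False
```

A subscription-level assignment therefore reaches both factories, while the resource-group assignment reaches only the dev factory, which is why resource groups suit per-environment access.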

Custom Roles and Fine-Grained Scenarios

Built-in roles may not satisfy every organizational scenario. Azure supports custom roles that specify exact actions (Actions), exclusions (NotActions), data-plane operations (DataActions), and assignable scopes. Custom roles enable:

- Limited Portal vs. SDK Access

You may allow a service principal to update pipelines via PowerShell or SDK, but prevent portal publishing. A custom role can omit the Microsoft.DataFactory/factories/write permission in “Live” mode while retaining data-plane actions. (Roles and permissions for Azure Data Factory)

- Read-Only Monitoring

Operators can view pipeline runs, metrics, and integration runtime health but cannot alter configurations. Assign the built-in Reader role at the factory scope or craft a custom role with only read and runtime-control actions (pause/resume, cancel). (Roles and permissions for Azure Data Factory)

- Developer vs. Data vs. Admin Personas

Separate duties so developers build pipelines and dataset definitions, data engineers curate datasets and linked services, and administrators manage repository settings, global parameters, and linked service credentials. Use custom roles to grant only the necessary Microsoft.DataFactory action sets to each persona. (Using Azure Custom Roles to Secure your Azure Data Factory Resources)
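How Actions and NotActions combine can be shown with a small evaluator. A minimal sketch, assuming Azure's documented rule that a role's control-plane permissions are its Actions minus its NotActions, that `*` acts as a wildcard, and that operation names compare case-insensitively. It ignores DataActions, role-assignment conditions, and deny assignments, and the developer role below is hypothetical.

```python
from fnmatch import fnmatchcase


def _matches(pattern: str, operation: str) -> bool:
    # '*' is a wildcard (it may span '/'); comparison is case-insensitive.
    return fnmatchcase(operation.lower(), pattern.lower())


def is_allowed(role: dict, operation: str) -> bool:
    """Effective permission from a single role: granted by Actions, not removed by NotActions."""
    granted = any(_matches(p, operation) for p in role.get("Actions", []))
    excluded = any(_matches(p, operation) for p in role.get("NotActions", []))
    return granted and not excluded


# Hypothetical developer role: broad factory rights minus linked service changes.
developer_role = {
    "Actions": ["Microsoft.DataFactory/factories/*"],
    "NotActions": [
        "Microsoft.DataFactory/factories/linkedservices/write",
        "Microsoft.DataFactory/factories/linkedservices/delete",
    ],
}

for op in [
    "Microsoft.DataFactory/factories/pipelines/write",
    "Microsoft.DataFactory/factories/linkedservices/write",
    "Microsoft.DataFactory/factories/linkedServices/read",
]:
    print(op, is_allowed(developer_role, op))
```

NotActions is a subtraction, not a deny: if another role assigned to the same principal grants `linkedservices/write`, the principal can still perform it.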

Common ADF Personas and Their Permissions

Defining personas aligns business processes with access control and makes least privilege easier to enforce. Typical personas include:

Operator

Monitors pipeline executions, triggers runs, and restarts failed activities. A custom “Data Factory Operator” role might include read actions on factory resources plus pipeline pause/resume and run/cancel actions, without design-time permissions. (Using Azure Custom Roles to Secure your Azure Data Factory Resources)

Developer

Designs and debugs pipelines, activities, and data flows. Needs write access to pipeline, dataset, data flow, and integration runtime definitions but not to linked service credentials or repository settings. Grant the built-in Data Factory Contributor role at the factory scope in dev environments, or create a custom role restricting linked service actions in production. (Roles and permissions for Azure Data Factory)

Data Engineer

Manages datasets and schema configurations. A data-oriented role can include Microsoft.DataFactory/factories/datasets/* actions and getDataPlaneAccess for previewing data, while excluding triggers and pipeline actions. (Roles and permissions for Azure Data Factory)

Administrator

Controls ADF instance settings, Git integration, global parameters, security, and linked service credentials. This persona requires the Contributor role at the factory scope (or higher) and might also need a Key Vault role that can manage secret values, such as Key Vault Secrets Officer, for the secrets used by ADF; Key Vault Contributor manages the vault itself but does not grant access to secrets. (Using Azure Custom Roles to Secure your Azure Data Factory Resources)

Implementing Custom Roles: An Example

Below is a conceptual outline (not a code block) of a “Data Factory Operator” custom role definition, demonstrating how to include only runtime-control and read actions:

– Actions:

• Microsoft.Authorization/*/read

• Microsoft.Resources/subscriptions/resourceGroups/read

• Microsoft.DataFactory/datafactories/*/read

• Microsoft.DataFactory/datafactories/datapipelines/pause/action

• Microsoft.DataFactory/datafactories/datapipelines/resume/action

• Microsoft.DataFactory/factories/pipelineruns/cancel/action

• Microsoft.DataFactory/factories/pipelines/createrun/action

• Microsoft.DataFactory/factories/triggers/start/action

• Microsoft.DataFactory/factories/triggers/stop/action

• Microsoft.DataFactory/factories/getDataPlaneAccess/action

– NotActions: []

– AssignableScopes: [ scope of your choice ]

(Using Azure Custom Roles to Secure your Azure Data Factory Resources)

The assignable scope can target a subscription, resource group, or single data factory. Collaborative workstreams can leverage multiple custom roles assigned at different scopes to achieve separation of development, test, and production duties.
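The outline can be turned into a JSON role definition of the kind accepted by `az role definition create --role-definition @file.json`. A minimal Python sketch, assuming a hypothetical subscription ID and resource group as the assignable scope. Two assumptions go beyond the outline: the pause/resume operations are written under the classic `Microsoft.DataFactory/datafactories` (v1) path, where Azure defines them, and `Microsoft.DataFactory/factories/read` plus `Microsoft.DataFactory/factories/*/read` are added so operators can also see current (v2) factories and their child resources. Verify every operation string against the Microsoft.DataFactory provider operations list before use.

```python
import json

# Hypothetical values: replace with your own subscription and resource group.
SUBSCRIPTION_ID = "00000000-0000-0000-0000-000000000000"
RESOURCE_GROUP = "rg-adf-prod"

operator_role = {
    "Name": "Data Factory Operator",
    "IsCustom": True,
    "Description": "Read ADF resources and control runs and triggers, without design-time permissions.",
    "Actions": [
        "Microsoft.Authorization/*/read",
        "Microsoft.Resources/subscriptions/resourceGroups/read",
        # Classic (v1) data factories.
        "Microsoft.DataFactory/datafactories/*/read",
        "Microsoft.DataFactory/datafactories/datapipelines/pause/action",
        "Microsoft.DataFactory/datafactories/datapipelines/resume/action",
        # Current (v2) factories; the two read entries are an added assumption.
        "Microsoft.DataFactory/factories/read",
        "Microsoft.DataFactory/factories/*/read",
        "Microsoft.DataFactory/factories/pipelineruns/cancel/action",
        "Microsoft.DataFactory/factories/pipelines/createrun/action",
        "Microsoft.DataFactory/factories/triggers/start/action",
        "Microsoft.DataFactory/factories/triggers/stop/action",
        "Microsoft.DataFactory/factories/getDataPlaneAccess/action",
    ],
    "NotActions": [],
    "DataActions": [],
    "NotDataActions": [],
    # Scopes at which the role may be assigned; narrower scopes limit its reach.
    "AssignableScopes": [
        f"/subscriptions/{SUBSCRIPTION_ID}/resourceGroups/{RESOURCE_GROUP}"
    ],
}

# Print the definition; save the output as adf-operator-role.json for the CLI.
print(json.dumps(operator_role, indent=2))
```

An empty NotActions list is enough here because the Actions list never grants write or delete operations in the first place.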

Step-By-Step: Assigning the Data Factory Contributor Role

- Sign in to the Azure portal and open your Data Factory resource.

- In the left menu, select Access control (IAM).

- Select Add > Add role assignment, and choose Data Factory Contributor on the Role tab.

- On the Members tab, select the users, groups, or service principals you wish to authorize.

- Select Review + assign. The assignees can now create and manage pipelines, datasets, linked services, triggers, and integration runtimes in that Data Factory. (How to set permissions in ADF ?)
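The same assignment can be scripted. A minimal sketch that only builds (and does not run) an Azure CLI `az role assignment create` command at the factory scope; the user principal name, subscription ID, resource group, and factory name are hypothetical placeholders.

```python
import shlex


def factory_resource_id(subscription_id: str, resource_group: str, factory: str) -> str:
    # Standard Azure resource ID of a Data Factory (v2) instance.
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory}"
    )


def role_assignment_command(assignee: str, role: str, scope: str) -> list[str]:
    # argv for 'az role assignment create'; pass it to subprocess.run to execute.
    return [
        "az", "role", "assignment", "create",
        "--assignee", assignee,
        "--role", role,
        "--scope", scope,
    ]


scope = factory_resource_id("00000000-0000-0000-0000-000000000000", "rg-adf-dev", "adf-dev-01")
cmd = role_assignment_command("alice@contoso.com", "Data Factory Contributor", scope)
print(shlex.join(cmd))  # shell-quoted form, for display only
```

Building an argument list instead of a single string avoids quoting problems with role names that contain spaces. `--assignee` also accepts an object ID or a service principal's application ID.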

Integrating Key Vault Permissions for Linked Services

Linked services often require secrets (connection strings, passwords). To avoid embedding credentials in ADF definitions:

- Store secrets in Azure Key Vault and enable Managed Identity authentication for ADF.

- Grant the Data Factory’s managed identity permission to read secret values, typically the Key Vault Secrets User role at the vault scope (RBAC permission model) or a Get secret permission in an access policy. Key Vault Reader alone is not enough, because it exposes vault metadata but not secret contents.

- Configure your linked service in ADF to reference Key Vault secrets.

This pattern keeps credentials out of code and YAML, and relies on RBAC for vault access. Administrators responsible for Key Vault management may also leverage custom roles or predefined Key Vault roles like Key Vault Contributor and Key Vault Administrator. (Azure data security and encryption best practices)
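A linked service that pulls its connection string from Key Vault can be expressed as two JSON definitions: an Azure Key Vault linked service, and a second linked service whose secret field is an `AzureKeyVaultSecret` reference to it. A minimal Python sketch that builds both; the vault URL, linked service names, and secret name are hypothetical.

```python
import json

# Hypothetical names for the vault, linked services, and secret.
key_vault_ls = {
    "name": "AzureKeyVaultLS",
    "properties": {
        "type": "AzureKeyVault",
        "typeProperties": {"baseUrl": "https://kv-adf-prod.vault.azure.net/"},
    },
}

sql_ls = {
    "name": "AzureSqlDatabaseLS",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            # No credential is stored in ADF; the secret is resolved from
            # Key Vault at runtime using the factory's managed identity.
            "connectionString": {
                "type": "AzureKeyVaultSecret",
                "store": {
                    "referenceName": key_vault_ls["name"],
                    "type": "LinkedServiceReference",
                },
                "secretName": "sql-connection-string",
            }
        },
    },
}

print(json.dumps([key_vault_ls, sql_ls], indent=2))
```

Because the SQL linked service stores only a secret name, rotating the connection string in Key Vault requires no change to the factory definition.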

Managing DevOps Pipelines with Role Assignments

In environments where ADF artifacts are developed via Git—Azure Repos or GitHub—the build and release pipelines require only service-principal or managed-identity access to execute deployments:

- Build stage: Validate ARM templates and run unit tests using only read-only Data Factory permissions.

- Release stage: Use a service principal with Contributor (or Data Factory Contributor) at the resource group scope to deploy.

Avoid granting developers who contribute to the Git repository elevated RBAC permissions that could let them publish changes inadvertently. Git permissions and RBAC permissions for deployment remain distinct: a user with repo write access but only the Reader role cannot publish to the production factory. (Roles and permissions for Azure Data Factory)

Best Practices for ADF Role Management

- Principle of Least Privilege

Assign minimal permissions required for each persona. Combine built-in and custom roles to tailor access.

- Environment Segregation

Use separate subscriptions or resource groups for development, test, and production. Assign roles per environment to reduce blast radius. (Data Factory security baseline)

- Conditional Access and ABAC

Use Microsoft Entra Conditional Access to require conditions such as trusted client locations for administrative sign-ins, and use Azure attribute-based access control (ABAC) conditions on role assignments, where the target resource type supports them, to narrow permissions by attributes such as tags. For temporary elevation, prefer time-bound, just-in-time role activation over standing assignments.

- Auditing and Monitoring

Enable Azure Monitor logs and configure alerts for unauthorized role changes and pipeline failures. Periodically review role assignments to detect stale permissions.

- Automated Role Assignment

Incorporate role assignments into Infrastructure as Code (ARM templates or Terraform) for repeatable, auditable deployments.

- Secure Privileged Access Workstations

Require subscription administrators to use hardened workstations for RBAC changes and Key Vault operations to minimize endpoint risk. (Azure data security and encryption best practices)

- Key Vault Integration

Use Managed Identities and RBAC for secure secret management instead of embedding business or system credentials in code or pipeline definitions.

Conclusion

Role-based access control in Azure Data Factory ensures that teams can collaborate without risking unauthorized changes or data leaks. By combining built-in roles like Data Factory Contributor and Reader with custom roles tailored to operator, developer, data, and administrator personas, organizations can achieve granular, least-privilege access across development, test, and production environments. Integrating ADF with Azure Key Vault for secret management, using Managed Identities for data-plane access, and enforcing ABAC conditions further strengthens your security posture. Finally, embedding RBAC assignments into your DevOps pipelines and regularly auditing permissions ensures that your data integration workflows remain both agile and secure.

30 Interview Questions on the ADF Process

Azure Data Factory (ADF) is Microsoft’s cloud-based data integration service that orchestrates and automates the movement and transformation of data at scale. As enterprises embrace hybrid and multi-cloud architectures, proficiency in ADF has become a core competence for data engineers, analytics professionals, and architects. The following 30 interview questions delve into ADF’s process concepts—from core components and integration runtimes to advanced deployment, security, monitoring, and optimization scenarios. Each question is paired with a thorough answer to help candidates demonstrate both theoretical understanding and practical expertise.

- Why is Azure Data Factory necessary in modern data architectures?

Azure Data Factory enables the creation of code-free, scalable ETL (Extract-Transform-Load) and ELT (Extract-Load-Transform) pipelines that span on-premises and cloud data stores. It abstracts infrastructure management by providing serverless orchestration, built-in connectors to 90+ data services, and native support for data transformation using Mapping Data Flows and Azure Databricks. This reduces time-to-insight and operational complexity compared to custom scripts or legacy ETL tools. (K21 Academy)

- What are the primary components of an ADF process, and how do they interact?

The main components include:

• Pipelines: Logical groupings of activities that perform data movement or transformation.

• Activities: Steps within a pipeline (Copy, Data Flow, Lookup, Web, etc.).

• Datasets: Metadata definitions pointing to data structures (tables, files) in linked stores.

• Linked Services: Connection strings and authentication for external data stores or compute environments.

• Integration Runtimes (IR): Compute infrastructure used for data movement, activity dispatch, data flow execution, and SSIS package execution (Azure IR, Self-hosted IR, Azure-SSIS IR).

• Triggers: Schedule, event-based, or tumbling-window mechanisms to launch pipelines automatically.

Together, these components orchestrate end-to-end data workflows across diverse sources. (DataCamp)

- How does Integration Runtime (IR) differ across its three types?

• Azure Integration Runtime: A Microsoft-managed, serverless compute environment for copying data between cloud stores and dispatching transformation tasks to Azure services.

• Self-Hosted Integration Runtime: Customer-installed runtime on on-premises machines or VMs. Because it initiates only outbound connections to ADF, it enables secure hybrid data movement without opening inbound firewall ports.

• Azure-SSIS Integration Runtime: A dedicated IR for lift-and-shift execution of SQL Server Integration Services (SSIS) packages in Azure, supporting existing SSIS workloads with minimal code changes. (K21 Academy)

- Describe the difference between ETL and ELT paradigms in the context of ADF.

In ETL, data is Extracted from source systems, Transformed on a dedicated compute engine (e.g., Data Flow, SSIS), and then Loaded into the destination for consumption. ELT reverses the last two steps: data is Extracted and Loaded into a destination (such as Azure Synapse or Azure SQL Database) where transformations occur using the destination’s compute power. ADF supports both paradigms, allowing transformation either in-pipeline (Mapping Data Flows or Compute services) or post-load in the target system. (ProjectPro)

- What is a Mapping Data Flow, and when would you use it?

A Mapping Data Flow is a visual, code-free ETL/ELT feature in ADF that leverages Spark under the hood to perform scalable data transformations (filter, join, aggregate, window, pivot, etc.). It’s ideal for complex transformations on large datasets without writing custom Spark code. You author transformations graphically and ADF handles Spark cluster provisioning and execution. (K21 Academy)

- Explain how you would implement incremental data loads in ADF.

Use a watermark column (e.g., LastModifiedDate) to track the highest processed timestamp. Store the last watermark in a control table or metadata store and read it with a Lookup activity. In the Copy activity’s source, use a parameterized query that filters rows greater than the stored watermark. After a successful load, update the watermark value (for example, with a Stored Procedure activity). This ensures only new or changed records are ingested each run, minimizing data movement. (Medium)

- How do tumbling window triggers differ from schedule and event-based triggers?

• Schedule Trigger: Executes pipelines at specified wall-clock times or recurrence intervals.

• Event-Based Trigger: Launches pipelines in response to resource events (e.g., Blob creation or deletion).

• Tumbling Window Trigger: Partitions execution into contiguous, non-overlapping time windows. It maintains state for each window and can retry failed windows without affecting others, making it well-suited for time-series processing and backfill scenarios. (K21 Academy)

- What strategies would you use to secure sensitive credentials and connection strings in ADF?

• Store secrets in Azure Key Vault and reference them from linked service definitions, with ADF authenticating to the vault through its Managed Identity.

• Enable Managed Virtual Network and Private Endpoints to keep data traffic within the Azure backbone.

• Use ADF’s Role-Based Access Control (RBAC) integrated with Azure Active Directory to restrict factory-level and resource-level permissions.

• Employ system-assigned or user-assigned Managed Identities to allow ADF to authenticate to Azure resources without embedded credentials. (DataCamp)

- How can you monitor, alert, and debug pipelines in ADF?

• Monitor tab in the Azure portal: View pipeline runs, activity runs, durations, and failure details.

• Azure Monitor integration: Send metrics and logs to Log Analytics, set up alerts on failure counts, latency, or custom metrics.

• Activity Retry Policies: Configure retry count and intervals in activity settings to auto-recover from transient failures.

• Debug mode: Test pipelines interactively in the authoring canvas, with on-screen details and data previews for Mapping Data Flows.

• Output and error logs: Inspect JSON error messages, stack traces, and diagnostic details directly in the portal or Log Analytics. (DataCamp)

- Describe a scenario where you would use a Lookup activity versus a Get Metadata activity.

• Lookup Activity: Retrieves data (up to 5,000 rows and 4 MB of output) from a table or file based on a query or path. Use it to fetch configuration values, filenames, or control records for dynamic pipeline logic.

• Get Metadata Activity: Fetches metadata properties of a dataset (child items, size, existence). Use it to check if files exist, list folder contents, or drive ForEach loops based on the number of child elements. (ProjectPro)

- How do you implement branching and looping in ADF pipelines?

• If Condition Activity: Evaluates an expression to execute one of two branches (true/false).

• Switch Activity: Routes execution based on matching expressions against multiple cases.

• ForEach Activity: Iterates over an array of items (e.g., filenames or lookup results) and runs a nested set of activities for each element.

• Until Activity: Repeats activities until a specified condition evaluates to true, useful for polling external systems until data is ready. (K21 Academy)

- What are custom activities, and when would you use them?

Custom activities allow you to run custom code (C#, Python, etc.) in an Azure Batch pool as part of an ADF pipeline. Use them when built-in activities or mapping data flows cannot accommodate specialized algorithms or SDKs. Examples include calling proprietary libraries, performing model inference, or running complex graph algorithms not natively supported. (DataCamp)

- How can you share a Self-Hosted Integration Runtime across multiple data factories?

- In the source Data Factory, open the self-hosted IR’s sharing settings and grant permission to each target factory, which gives that factory’s managed identity access to the shared IR.

- In the target Data Factory, create a new Linked Integration Runtime and provide the Resource ID of the shared IR.

- Configure access controls to ensure the shared IR can execute jobs on behalf of the target factories.(Medium)
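The Resource ID requested in the second step is the ARM ID of the self-hosted IR in the source factory. A small Python sketch that builds such an ID and parses it back, for example to record which factory owns a linked IR; the subscription, resource group, factory, and IR names are hypothetical.

```python
import re


def shared_ir_resource_id(subscription_id: str, resource_group: str, factory: str, ir_name: str) -> str:
    # ARM ID of an integration runtime inside a Data Factory (v2).
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory}"
        f"/integrationruntimes/{ir_name}"
    )


_IR_ID = re.compile(
    r"^/subscriptions/(?P<sub>[^/]+)/resourceGroups/(?P<rg>[^/]+)"
    r"/providers/Microsoft\.DataFactory/factories/(?P<factory>[^/]+)"
    r"/integrationruntimes/(?P<ir>[^/]+)$",
    re.IGNORECASE,  # ARM resource IDs are case-insensitive
)


def parse_ir_resource_id(resource_id: str) -> dict:
    match = _IR_ID.match(resource_id)
    if match is None:
        raise ValueError(f"not an integration runtime resource ID: {resource_id}")
    return match.groupdict()


ir_id = shared_ir_resource_id(
    "00000000-0000-0000-0000-000000000000", "rg-shared", "adf-hub", "shir-onprem"
)
print(ir_id)
print(parse_ir_resource_id(ir_id)["factory"])
```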

- Discuss best practices for deploying ADF pipelines across dev, test, and prod environments.

• Use Git integration (Azure DevOps or GitHub) for source control, branching, and pull requests.

• Parameterize linked services, datasets, and pipelines to externalize environment-specific values.

• Implement Azure DevOps pipelines or GitHub Actions to automatically validate ARM templates, run integration tests, and deploy factories via ARM or PowerShell.

• Employ naming conventions and folders to organize pipelines logically.

• Secure secrets in Key Vault and reference via vault references in all environments. (ProjectPro)

- How would you optimize performance when copying very large datasets?

• Use PolyBase or the COPY statement instead of the default bulk insert when loading into Azure Synapse Analytics (formerly SQL Data Warehouse).

• Adjust Copy activity’s Parallel Copies and Data Integration Units (DIUs) to scale throughput.

• Leverage staging in Azure Blob Storage or Azure Data Lake Storage to optimize network performance.

• Compress data in transit with GZip or Deflate.

• Partition source data and use multiple Copy activities in parallel for partitioned workloads. (K21 Academy)

- Explain how you would handle schema drift in Mapping Data Flows.

Schema drift occurs when the source schema changes over time. In Mapping Data Flows, enable “Allow schema drift” in the source (and, where needed, sink) settings. Enable “Auto mapping” in the sink to map new columns automatically. Use column patterns in “Select” or “Derived Column” transformations to handle renamed or newly added fields dynamically. (DataCamp)

- How can you implement data lineage and audit logging in ADF?

• Enable diagnostic settings to send pipeline and activity run logs to Log Analytics, Event Hubs, or Storage Accounts.

• Use Azure Purview integration to automatically capture data lineage and impact analysis across ADF pipelines, datasets, and linked services.

• Incorporate custom logging within pipelines (e.g., Web activity calling an Azure Function) to record business-level lineage or audit events. (DataCamp)

- What is the role of Azure Key Vault in ADF, and how do you integrate it?

Azure Key Vault centrally stores secrets, certificates, and keys. Create an Azure Key Vault linked service that points to the vault’s base URL, then reference secrets by name from other linked services. Grant the ADF Managed Identity Get (and optionally List) secret permissions through an access policy, or the Key Vault Secrets User role under the RBAC permission model. ADF retrieves secrets at runtime without exposing them in the factory JSON or pipelines. (K21 Academy)

- Describe how to migrate existing SSIS packages to ADF.

- Provision an Azure-SSIS Integration Runtime in ADF.

- Configure the SSISDB catalog in Azure SQL Database (or SQL Managed Instance) as part of the IR setup.

- Assess packages for cloud compatibility (for example, with Data Migration Assistant) and deploy them to SSISDB with familiar tools such as SSDT, SSMS, or the Integration Services Deployment Wizard.

- Validate package execution, update connection managers to point to cloud data sources, and tune performance by adjusting the IR’s node size and node count if needed. (K21 Academy)

- How do you parameterize pipelines and datasets for dynamic execution?

• Define pipeline parameters in the pipeline’s JSON schema.

• Use these parameters to set values for dataset properties (file paths, table names), linked service connection strings, and activity settings.

• Pass parameter values during pipeline invocation via UI, REST API, PowerShell, or triggers.

• This enables reusability of pipeline logic across multiple environments or scenarios. (ProjectPro)

- What techniques can you use to enforce data quality in ADF processes?

• Use Mapping Data Flow to implement data validation rules (null checks, range checks, pattern matching) and route invalid records to separate sinks.

• Integrate with external data-quality services or third-party libraries in custom activities.

• Implement pre- and post-load checks using Lookup or Stored Procedure activities to validate record counts, checksums, or referential constraints.

• Configure alerts in Azure Monitor for data anomalies or threshold breaches. (DataCamp)

- How can you call an Azure Function or Databricks notebook from ADF?

• Use the Azure Function activity to invoke Azure Functions (through an Azure Function linked service that holds the function app URL and key), or the Web activity for other REST APIs, supplying the URL and any necessary headers.

• Use the Databricks Notebook activity to run notebooks in Azure Databricks. The Azure Databricks linked service holds the workspace URL, cluster settings, and authentication (such as an access token), while the activity specifies the notebook path and base parameters.

• Use the Azure Batch or Custom Activity for more advanced orchestration scenarios. (DataCamp)

- Explain how you would implement a fan-out/fan-in pattern in ADF.

• Fan-Out: Use a Lookup or Get Metadata activity to return an array of items (e.g., file names).

• Pass this array to a ForEach activity, which spawns parallel execution branches (Copy or Data Flow activities) for each item.

• Fan-In: After all parallel branches complete, use an aggregate or Union transformation in a Mapping Data Flow, or a final Stored Procedure activity to consolidate results into a single sink. (Medium)

- How do you manage versioning and rollback of ADF pipelines?

• Store factory code in Git (Azure DevOps or GitHub) with branches for feature development and release.

• Use pull requests to review changes and merge to the main branch.

• Trigger CI/CD pipelines to deploy specific commit hashes or tags to target environments.

• If an issue arises, revert the merge or deploy a previous tag to roll back the factory to a known good state. (ProjectPro)

- What is the difference between Copy Activity and Data Flow Activity?

• Copy Activity: High-performance data movement between stores, with optional basic transformations (column mapping, compression). Ideal for bulk data transfer.

• Data Flow Activity: Runs Mapping Data Flows on Spark clusters for complex transformations (joins, lookups, aggregations, pivot/unpivot) with code-free authoring. Suitable for compute-intensive ETL/ELT tasks. (K21 Academy)

- How would you implement real-time or near real-time data processing in ADF?

While ADF is inherently batch-oriented, you can approximate near real-time by:

• Using event-based triggers (storage event triggers on Azure Blob Storage or ADLS Gen2, or custom event triggers on Event Grid topics) to invoke pipelines soon after data arrives.

• Integrating Azure Stream Analytics or Azure Functions for stream processing, then using ADF to orchestrate downstream enrichment or storage.

• Employing small tumbling window intervals (e.g., 1-minute windows) for frequent batch jobs. (Medium)

- Describe how you can call one pipeline from another and why this is useful.

Use the Execute Pipeline activity to invoke a child pipeline within a parent pipeline. This promotes modular design, code reuse, and separation of concerns (e.g., dedicated pipelines for staging, transformation, and loading). You can also pass parameters from the parent to the child pipeline to customize its behavior. (K21 Academy)

- What are Data Flow Debug sessions, and how do they help development?

Data Flow Debug sessions spin up an interactive Spark cluster for real-time testing of Mapping Data Flows. This allows data preview at each transformation step, rapid iteration without pipeline runs, and immediate insight into schema and data drift issues, greatly accelerating development and troubleshooting. (DataCamp)

- How do you ensure idempotency in ADF pipelines?

Idempotent pipelines produce the same result regardless of how many times they run. Techniques include:

• Using upsert or merge logic in Copy or Mapping Data Flows to avoid duplicate rows.

• Truncating or archiving target tables before load when full reloads are acceptable.

• Tracking processed records in control tables and filtering new runs accordingly.

• Designing pipelines to handle retries and restarts gracefully via checkpoints (tumbling windows) or watermarking. (ProjectPro)

- What considerations would you make when designing a highly available and scalable ADF solution?

• Global scale and regional resilience: Use geo-redundant storage (RA-GRS) and deploy factories to more than one region (for example, from the same Git repository and CI/CD pipeline) so you can fail over regionally if business-continuity or compliance requirements demand it.

• Integration Runtime scaling: Size the Azure-SSIS IR (node size and node count) for the workload, use multiple Self-Hosted IR nodes for load balancing and high availability, and scale DIUs for Copy activities.

• Fault tolerance: Implement retry policies, tumbling window triggers for stateful reprocessing, and circuit breakers (If Condition) to isolate faults.

• Monitoring and alerting: Centralize logs in Log Analytics, set proactive alerts, and configure Service Health notifications.

• Security: Use private link, virtual networks, Key Vault, and RBAC to meet enterprise compliance standards.
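For the fault-tolerance point above, retries are configured per activity through its `policy` block. A minimal Python sketch that builds a partial Copy activity definition with `retry`, `retryIntervalInSeconds`, and `timeout` (in ADF’s `d.hh:mm:ss` format), plus a helper that totals the time spent waiting between attempts if every attempt fails. The activity name and values are hypothetical, and source, sink, and dataset settings are omitted.

```python
import json


def copy_activity_with_retry(name: str, retries: int, interval_seconds: int, timeout: str) -> dict:
    """Partial Copy activity JSON; source, sink, and dataset references omitted."""
    return {
        "name": name,
        "type": "Copy",
        "policy": {
            "timeout": timeout,                          # maximum run time, d.hh:mm:ss
            "retry": retries,                            # maximum number of retry attempts
            "retryIntervalInSeconds": interval_seconds,  # delay between attempts
        },
    }


def total_retry_wait_seconds(activity: dict) -> int:
    # Waiting time between attempts only; excludes the attempts' own run time.
    policy = activity["policy"]
    return policy["retry"] * policy["retryIntervalInSeconds"]


activity = copy_activity_with_retry(
    "CopySalesToLake", retries=3, interval_seconds=60, timeout="0.02:00:00"
)
print(json.dumps(activity, indent=2))
print(total_retry_wait_seconds(activity))  # 180
```

With three retries at 60-second intervals, up to 180 seconds are spent waiting between attempts, which is worth budgeting for when a downstream SLA or tumbling window depends on the activity.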

These 30 questions cover foundational concepts, development best practices, operational excellence, security, performance, and real-world scenarios. Mastering them will prepare you to articulate a comprehensive understanding of the ADF process, demonstrate hands-on experience, and design robust, scalable data integration solutions in Azure.