In continuation of my previous session, "12. DevOps: How to build docker images using dockerfile?", in this session I would like to demonstrate the following exercise:

Working with dockerfile to build apache2 container:

In this exercise, I would like to build a container with the apache2 web server set up in it.

At the end of this exercise, you will see the Apache2 web page, served from a docker container, in the Firefox browser.

Note: If you want to recollect the docker commands to be used during your current lab practice, visit my blog link:

https://vskumarblogs.wordpress.com/2017/12/13/some-useful-docker-commands-for-handling-images-and-containers/

Now, I want to create a separate directory as below:

====================>

vskumar@ubuntu:~$ pwd

/home/vskumar

vskumar@ubuntu:~$

vskumar@ubuntu:~$ mkdir apache1

vskumar@ubuntu:~$ cd apache1

vskumar@ubuntu:~/apache1$ pwd

/home/vskumar/apache1

vskumar@ubuntu:~/apache1$

====================>

To install apache2 on ubuntu16.04, let us analyze the steps as below:

Step 1: Install Apache

To install apache2 on Ubuntu we can use the following commands:

sudo apt-get update

sudo apt-get install apache2

We need to include the above commands in the dockerfile (without sudo, since the build steps already run as root).

Let me put all the commands together in the dockerfile as below:

======== You can see the current dockerfile, which will be used ====>

vskumar@ubuntu:~/apache1$ pwd

/home/vskumar/apache1

vskumar@ubuntu:~/apache1$ ls

dockerfile

vskumar@ubuntu:~/apache1$ cat dockerfile

FROM ubuntu:16.04

MAINTAINER "Vskumar" <vskumar35@gmail.com>

RUN apt-get update && apt-get clean

RUN apt-get -y install apache2 && apt-get clean

RUN echo "Apache running!!" >> /var/www/html/index.html

# We have used the base image of ubuntu 16.04

# update all

# cleaned all

# We have installed Apache

# We have echoed a message as Apache is running

# into index.html file

EXPOSE 80

# We have declared port 80 for apache2

vskumar@ubuntu:~/apache1$

====The above lines are from dockerfile to install apache2 in Ubuntu container ====>

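So, the purpose of the above dockerfile is:

- It builds an image from the ubuntu 16.04 base image, with the maintainer name "Vskumar".

- It updates the package lists.

- It installs apache2.

- It appends the message "Apache running!!" to /var/www/html/index.html.

- It declares port 80 with the EXPOSE command. EXPOSE only documents the port; the port is published to the host with the -p option of docker run.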
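The same steps can also be written more compactly. The following is only a sketch under my own assumptions, not the dockerfile used for the build output in this session: it writes a variant dockerfile into a scratch directory (the directory name apache1-sketch is hypothetical), merges the update and install steps into one RUN layer, uses LABEL in place of the deprecated MAINTAINER instruction, and adds a CMD so that Apache starts in the foreground when the container runs.

```shell
#!/bin/sh
set -eu

# Hypothetical scratch directory; not the ~/apache1 directory used above.
workdir="${TMPDIR:-/tmp}/apache1-sketch"
mkdir -p "$workdir"

# The quoted 'EOF' keeps the shell from expanding anything inside the heredoc.
cat > "$workdir/Dockerfile" <<'EOF'
FROM ubuntu:16.04
# LABEL replaces the deprecated MAINTAINER instruction
LABEL maintainer="Vskumar <vskumar35@gmail.com>"
# Refresh the package lists and install apache2 in a single layer
RUN apt-get update \
 && apt-get -y install apache2 \
 && apt-get clean \
 && rm -rf /var/lib/apt/lists/*
RUN echo "Apache running!!" >> /var/www/html/index.html
# EXPOSE documents the port; it does not publish it to the host
EXPOSE 80
# Assumption: start Apache in the foreground as the container's main process
CMD ["apache2ctl", "-D", "FOREGROUND"]
EOF

echo "wrote $workdir/Dockerfile"
```

Keeping apt-get update and apt-get install in the same RUN avoids installing from a stale cached package list when only the install line changes later.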

Now, let us run this build as below and review the output:

=============== Installing apache2 on ubuntu container with dockerfile =====>

vskumar@ubuntu:~/apache1$ sudo docker build -t ubuntu16.04/apache2 .

Sending build context to Docker daemon 2.048kB

Step 1/6 : FROM ubuntu:16.04

 ---> 20c44cd7596f

Step 2/6 : MAINTAINER "Vskumar" <vskumar35@gmail.com>

 ---> Running in e7c786e9d724

Removing intermediate container e7c786e9d724

 ---> de795f3ddd1f

Step 3/6 : RUN apt-get update && apt-get clean

 ---> Running in 712d867e5412

Get:1 http://security.ubuntu.com/ubuntu xenial-security InRelease [102 kB]

Get:2 http://archive.ubuntu.com/ubuntu xenial InRelease [247 kB]

Get:3 http://archive.ubuntu.com/ubuntu xenial-updates InRelease [102 kB]

Get:4 http://archive.ubuntu.com/ubuntu xenial-backports InRelease [102 kB]

Get:5 http://archive.ubuntu.com/ubuntu xenial/universe Sources [9802 kB]

Get:6 http://security.ubuntu.com/ubuntu xenial-security/universe Sources [53.1 kB]

Get:7 http://security.ubuntu.com/ubuntu xenial-security/main amd64 Packages [505 kB]

Get:8 http://security.ubuntu.com/ubuntu xenial-security/restricted amd64 Packages [12.9 kB]

Get:9 http://security.ubuntu.com/ubuntu xenial-security/universe amd64 Packages [229 kB]

Get:10 http://security.ubuntu.com/ubuntu xenial-security/multiverse amd64 Packages [3479 B]

Get:11 http://archive.ubuntu.com/ubuntu xenial/main amd64 Packages [1558 kB]

Get:12 http://archive.ubuntu.com/ubuntu xenial/restricted amd64 Packages [14.1 kB]

Get:13 http://archive.ubuntu.com/ubuntu xenial/universe amd64 Packages [9827 kB]

Get:14 http://archive.ubuntu.com/ubuntu xenial/multiverse amd64 Packages [176 kB]

Get:15 http://archive.ubuntu.com/ubuntu xenial-updates/universe Sources [231 kB]

Get:16 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 Packages [866 kB]

Get:17 http://archive.ubuntu.com/ubuntu xenial-updates/restricted amd64 Packages [13.7 kB]

Get:18 http://archive.ubuntu.com/ubuntu xenial-updates/universe amd64 Packages [719 kB]

Get:19 http://archive.ubuntu.com/ubuntu xenial-updates/multiverse amd64 Packages [18.5 kB]

Get:20 http://archive.ubuntu.com/ubuntu xenial-backports/main amd64 Packages [5174 B]

Get:21 http://archive.ubuntu.com/ubuntu xenial-backports/universe amd64 Packages [7150 B]

Fetched 24.6 MB in 29s (825 kB/s)

Reading package lists…

Removing intermediate container 712d867e5412

 ---> 1780fbb9121e

Step 4/6 : RUN apt-get -y install apache2 && apt-get clean

 ---> Running in d9e9198a3e05

Reading package lists…

Building dependency tree…

Reading state information…

The following additional packages will be installed:

apache2-bin apache2-data apache2-utils file ifupdown iproute2

isc-dhcp-client isc-dhcp-common libapr1 libaprutil1 libaprutil1-dbd-sqlite3

libaprutil1-ldap libasn1-8-heimdal libatm1 libdns-export162 libexpat1

libffi6 libgdbm3 libgmp10 libgnutls30 libgssapi3-heimdal libhcrypto4-heimdal

libheimbase1-heimdal libheimntlm0-heimdal libhogweed4 libhx509-5-heimdal

libicu55 libidn11 libisc-export160 libkrb5-26-heimdal libldap-2.4-2

liblua5.1-0 libmagic1 libmnl0 libnettle6 libp11-kit0 libperl5.22

libroken18-heimdal libsasl2-2 libsasl2-modules libsasl2-modules-db

libsqlite3-0 libssl1.0.0 libtasn1-6 libwind0-heimdal libxml2 libxtables11

mime-support netbase openssl perl perl-modules-5.22 rename sgml-base

ssl-cert xml-core

Suggested packages:

www-browser apache2-doc apache2-suexec-pristine | apache2-suexec-custom ufw

ppp rdnssd iproute2-doc resolvconf avahi-autoipd isc-dhcp-client-ddns

apparmor gnutls-bin libsasl2-modules-otp libsasl2-modules-ldap

libsasl2-modules-sql libsasl2-modules-gssapi-mit

| libsasl2-modules-gssapi-heimdal ca-certificates perl-doc

libterm-readline-gnu-perl | libterm-readline-perl-perl make sgml-base-doc

openssl-blacklist debhelper

The following NEW packages will be installed:

apache2 apache2-bin apache2-data apache2-utils file ifupdown iproute2

isc-dhcp-client isc-dhcp-common libapr1 libaprutil1 libaprutil1-dbd-sqlite3

libaprutil1-ldap libasn1-8-heimdal libatm1 libdns-export162 libexpat1

libffi6 libgdbm3 libgmp10 libgnutls30 libgssapi3-heimdal libhcrypto4-heimdal

libheimbase1-heimdal libheimntlm0-heimdal libhogweed4 libhx509-5-heimdal

libicu55 libidn11 libisc-export160 libkrb5-26-heimdal libldap-2.4-2

liblua5.1-0 libmagic1 libmnl0 libnettle6 libp11-kit0 libperl5.22

libroken18-heimdal libsasl2-2 libsasl2-modules libsasl2-modules-db

libsqlite3-0 libssl1.0.0 libtasn1-6 libwind0-heimdal libxml2 libxtables11

mime-support netbase openssl perl perl-modules-5.22 rename sgml-base

ssl-cert xml-core

0 upgraded, 57 newly installed, 0 to remove and 2 not upgraded.

Need to get 22.7 MB of archives.

After this operation, 102 MB of additional disk space will be used.

Get:1 http://archive.ubuntu.com/ubuntu xenial/main amd64 libatm1 amd64 1:2.5.1-1.5 [24.2 kB]

Get:2 http://archive.ubuntu.com/ubuntu xenial/main amd64 libmnl0 amd64 1.0.3-5 [12.0 kB]

Get:3 http://archive.ubuntu.com/ubuntu xenial/main amd64 libgdbm3 amd64 1.8.3-13.1 [16.9 kB]

Get:4 http://archive.ubuntu.com/ubuntu xenial/main amd64 sgml-base all 1.26+nmu4ubuntu1 [12.5 kB]

Get:5 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 perl-modules-5.22 all 5.22.1-9ubuntu0.2 [2661 kB]

Get:6 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libperl5.22 amd64 5.22.1-9ubuntu0.2 [3391 kB]

Get:7 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 perl amd64 5.22.1-9ubuntu0.2 [237 kB]

Get:8 http://archive.ubuntu.com/ubuntu xenial/main amd64 mime-support all 3.59ubuntu1 [31.0 kB]

Get:9 http://archive.ubuntu.com/ubuntu xenial/main amd64 libapr1 amd64 1.5.2-3 [86.0 kB]

Get:10 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libexpat1 amd64 2.1.0-7ubuntu0.16.04.3 [71.2 kB]

Get:11 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libssl1.0.0 amd64 1.0.2g-1ubuntu4.9 [1085 kB]

Get:12 http://archive.ubuntu.com/ubuntu xenial/main amd64 libaprutil1 amd64 1.5.4-1build1 [77.1 kB]

Get:13 http://archive.ubuntu.com/ubuntu xenial/main amd64 libsqlite3-0 amd64 3.11.0-1ubuntu1 [396 kB]

Get:14 http://archive.ubuntu.com/ubuntu xenial/main amd64 libaprutil1-dbd-sqlite3 amd64 1.5.4-1build1 [10.6 kB]

Get:15 http://archive.ubuntu.com/ubuntu xenial/main amd64 libgmp10 amd64 2:6.1.0+dfsg-2 [240 kB]

Get:16 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libnettle6 amd64 3.2-1ubuntu0.16.04.1 [93.5 kB]

Get:17 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libhogweed4 amd64 3.2-1ubuntu0.16.04.1 [136 kB]

Get:18 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libidn11 amd64 1.32-3ubuntu1.2 [46.5 kB]

Get:19 http://archive.ubuntu.com/ubuntu xenial/main amd64 libffi6 amd64 3.2.1-4 [17.8 kB]

Get:20 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libp11-kit0 amd64 0.23.2-5~ubuntu16.04.1 [105 kB]

Get:21 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libtasn1-6 amd64 4.7-3ubuntu0.16.04.2 [43.3 kB]

Get:22 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libgnutls30 amd64 3.4.10-4ubuntu1.4 [548 kB]

Get:23 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libroken18-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [41.4 kB]

Get:24 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libasn1-8-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [174 kB]

Get:25 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libhcrypto4-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [85.0 kB]

Get:26 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libheimbase1-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [29.3 kB]

Get:27 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libwind0-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [47.8 kB]

Get:28 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libhx509-5-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [107 kB]

Get:29 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libkrb5-26-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [202 kB]

Get:30 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libheimntlm0-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [15.1 kB]

Get:31 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libgssapi3-heimdal amd64 1.7~git20150920+dfsg-4ubuntu1.16.04.1 [96.1 kB]

Get:32 http://archive.ubuntu.com/ubuntu xenial/main amd64 libsasl2-modules-db amd64 2.1.26.dfsg1-14build1 [14.5 kB]

Get:33 http://archive.ubuntu.com/ubuntu xenial/main amd64 libsasl2-2 amd64 2.1.26.dfsg1-14build1 [48.7 kB]

Get:34 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libldap-2.4-2 amd64 2.4.42+dfsg-2ubuntu3.2 [160 kB]

Get:35 http://archive.ubuntu.com/ubuntu xenial/main amd64 libaprutil1-ldap amd64 1.5.4-1build1 [8720 B]

Get:36 http://archive.ubuntu.com/ubuntu xenial/main amd64 liblua5.1-0 amd64 5.1.5-8ubuntu1 [102 kB]

Get:37 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libicu55 amd64 55.1-7ubuntu0.3 [7658 kB]

Get:38 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libxml2 amd64 2.9.3+dfsg1-1ubuntu0.3 [697 kB]

Get:39 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 apache2-bin amd64 2.4.18-2ubuntu3.5 [925 kB]

Get:40 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 apache2-utils amd64 2.4.18-2ubuntu3.5 [82.3 kB]

Get:41 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 apache2-data all 2.4.18-2ubuntu3.5 [162 kB]

Get:42 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 apache2 amd64 2.4.18-2ubuntu3.5 [86.7 kB]

Get:43 http://archive.ubuntu.com/ubuntu xenial/main amd64 libmagic1 amd64 1:5.25-2ubuntu1 [216 kB]

Get:44 http://archive.ubuntu.com/ubuntu xenial/main amd64 file amd64 1:5.25-2ubuntu1 [21.2 kB]

Get:45 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 iproute2 amd64 4.3.0-1ubuntu3.16.04.2 [522 kB]

Get:46 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 ifupdown amd64 0.8.10ubuntu1.2 [54.9 kB]

Get:47 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libisc-export160 amd64 1:9.10.3.dfsg.P4-8ubuntu1.9 [153 kB]

Get:48 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libdns-export162 amd64 1:9.10.3.dfsg.P4-8ubuntu1.9 [666 kB]

Get:49 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 isc-dhcp-client amd64 4.3.3-5ubuntu12.7 [223 kB]

Get:50 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 isc-dhcp-common amd64 4.3.3-5ubuntu12.7 [105 kB]

Get:51 http://archive.ubuntu.com/ubuntu xenial/main amd64 libxtables11 amd64 1.6.0-2ubuntu3 [27.2 kB]

Get:52 http://archive.ubuntu.com/ubuntu xenial/main amd64 netbase all 5.3 [12.9 kB]

Get:53 http://archive.ubuntu.com/ubuntu xenial/main amd64 libsasl2-modules amd64 2.1.26.dfsg1-14build1 [47.5 kB]

Get:54 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 openssl amd64 1.0.2g-1ubuntu4.9 [492 kB]

Get:55 http://archive.ubuntu.com/ubuntu xenial/main amd64 xml-core all 0.13+nmu2 [23.3 kB]

Get:56 http://archive.ubuntu.com/ubuntu xenial/main amd64 rename all 0.20-4 [12.0 kB]

Get:57 http://archive.ubuntu.com/ubuntu xenial/main amd64 ssl-cert all 1.0.37 [16.9 kB]

debconf: delaying package configuration, since apt-utils is not installed

Fetched 22.7 MB in 1min 49s (206 kB/s)

Selecting previously unselected package libatm1:amd64.

(Reading database … 4768 files and directories currently installed.)

Preparing to unpack …/libatm1_1%3a2.5.1-1.5_amd64.deb …

Unpacking libatm1:amd64 (1:2.5.1-1.5) …

Selecting previously unselected package libmnl0:amd64.

Preparing to unpack …/libmnl0_1.0.3-5_amd64.deb …

Unpacking libmnl0:amd64 (1.0.3-5) …

Selecting previously unselected package libgdbm3:amd64.

Preparing to unpack …/libgdbm3_1.8.3-13.1_amd64.deb …

Unpacking libgdbm3:amd64 (1.8.3-13.1) …

Selecting previously unselected package sgml-base.

Preparing to unpack …/sgml-base_1.26+nmu4ubuntu1_all.deb …

Unpacking sgml-base (1.26+nmu4ubuntu1) …

Selecting previously unselected package perl-modules-5.22.

Preparing to unpack …/perl-modules-5.22_5.22.1-9ubuntu0.2_all.deb …

Unpacking perl-modules-5.22 (5.22.1-9ubuntu0.2) …

Selecting previously unselected package libperl5.22:amd64.

Preparing to unpack …/libperl5.22_5.22.1-9ubuntu0.2_amd64.deb …

Unpacking libperl5.22:amd64 (5.22.1-9ubuntu0.2) …

Selecting previously unselected package perl.

Preparing to unpack …/perl_5.22.1-9ubuntu0.2_amd64.deb …

Unpacking perl (5.22.1-9ubuntu0.2) …

Selecting previously unselected package mime-support.

Preparing to unpack …/mime-support_3.59ubuntu1_all.deb …

Unpacking mime-support (3.59ubuntu1) …

Selecting previously unselected package libapr1:amd64.

Preparing to unpack …/libapr1_1.5.2-3_amd64.deb …

Unpacking libapr1:amd64 (1.5.2-3) …

Selecting previously unselected package libexpat1:amd64.

Preparing to unpack …/libexpat1_2.1.0-7ubuntu0.16.04.3_amd64.deb …

Unpacking libexpat1:amd64 (2.1.0-7ubuntu0.16.04.3) …

Selecting previously unselected package libssl1.0.0:amd64.

Preparing to unpack …/libssl1.0.0_1.0.2g-1ubuntu4.9_amd64.deb …

Unpacking libssl1.0.0:amd64 (1.0.2g-1ubuntu4.9) …

Selecting previously unselected package libaprutil1:amd64.

Preparing to unpack …/libaprutil1_1.5.4-1build1_amd64.deb …

Unpacking libaprutil1:amd64 (1.5.4-1build1) …

Selecting previously unselected package libsqlite3-0:amd64.

Preparing to unpack …/libsqlite3-0_3.11.0-1ubuntu1_amd64.deb …

Unpacking libsqlite3-0:amd64 (3.11.0-1ubuntu1) …

Selecting previously unselected package libaprutil1-dbd-sqlite3:amd64.

Preparing to unpack …/libaprutil1-dbd-sqlite3_1.5.4-1build1_amd64.deb …

Unpacking libaprutil1-dbd-sqlite3:amd64 (1.5.4-1build1) …

Selecting previously unselected package libgmp10:amd64.

Preparing to unpack …/libgmp10_2%3a6.1.0+dfsg-2_amd64.deb …

Unpacking libgmp10:amd64 (2:6.1.0+dfsg-2) …

Selecting previously unselected package libnettle6:amd64.

Preparing to unpack …/libnettle6_3.2-1ubuntu0.16.04.1_amd64.deb …

Unpacking libnettle6:amd64 (3.2-1ubuntu0.16.04.1) …

Selecting previously unselected package libhogweed4:amd64.

Preparing to unpack …/libhogweed4_3.2-1ubuntu0.16.04.1_amd64.deb …

Unpacking libhogweed4:amd64 (3.2-1ubuntu0.16.04.1) …

Selecting previously unselected package libidn11:amd64.

Preparing to unpack …/libidn11_1.32-3ubuntu1.2_amd64.deb …

Unpacking libidn11:amd64 (1.32-3ubuntu1.2) …

Selecting previously unselected package libffi6:amd64.

Preparing to unpack …/libffi6_3.2.1-4_amd64.deb …

Unpacking libffi6:amd64 (3.2.1-4) …

Selecting previously unselected package libp11-kit0:amd64.

Preparing to unpack …/libp11-kit0_0.23.2-5~ubuntu16.04.1_amd64.deb …

Unpacking libp11-kit0:amd64 (0.23.2-5~ubuntu16.04.1) …

Selecting previously unselected package libtasn1-6:amd64.

Preparing to unpack …/libtasn1-6_4.7-3ubuntu0.16.04.2_amd64.deb …

Unpacking libtasn1-6:amd64 (4.7-3ubuntu0.16.04.2) …

Selecting previously unselected package libgnutls30:amd64.

Preparing to unpack …/libgnutls30_3.4.10-4ubuntu1.4_amd64.deb …

Unpacking libgnutls30:amd64 (3.4.10-4ubuntu1.4) …

Selecting previously unselected package libroken18-heimdal:amd64.

Preparing to unpack …/libroken18-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libroken18-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libasn1-8-heimdal:amd64.

Preparing to unpack …/libasn1-8-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libasn1-8-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libhcrypto4-heimdal:amd64.

Preparing to unpack …/libhcrypto4-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libhcrypto4-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libheimbase1-heimdal:amd64.

Preparing to unpack …/libheimbase1-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libheimbase1-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libwind0-heimdal:amd64.

Preparing to unpack …/libwind0-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libwind0-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libhx509-5-heimdal:amd64.

Preparing to unpack …/libhx509-5-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libhx509-5-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libkrb5-26-heimdal:amd64.

Preparing to unpack …/libkrb5-26-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libkrb5-26-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libheimntlm0-heimdal:amd64.

Preparing to unpack …/libheimntlm0-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libheimntlm0-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libgssapi3-heimdal:amd64.

Preparing to unpack …/libgssapi3-heimdal_1.7~git20150920+dfsg-4ubuntu1.16.04.1_amd64.deb …

Unpacking libgssapi3-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Selecting previously unselected package libsasl2-modules-db:amd64.

Preparing to unpack …/libsasl2-modules-db_2.1.26.dfsg1-14build1_amd64.deb …

Unpacking libsasl2-modules-db:amd64 (2.1.26.dfsg1-14build1) …

Selecting previously unselected package libsasl2-2:amd64.

Preparing to unpack …/libsasl2-2_2.1.26.dfsg1-14build1_amd64.deb …

Unpacking libsasl2-2:amd64 (2.1.26.dfsg1-14build1) …

Selecting previously unselected package libldap-2.4-2:amd64.

Preparing to unpack …/libldap-2.4-2_2.4.42+dfsg-2ubuntu3.2_amd64.deb …

Unpacking libldap-2.4-2:amd64 (2.4.42+dfsg-2ubuntu3.2) …

Selecting previously unselected package libaprutil1-ldap:amd64.

Preparing to unpack …/libaprutil1-ldap_1.5.4-1build1_amd64.deb …

Unpacking libaprutil1-ldap:amd64 (1.5.4-1build1) …

Selecting previously unselected package liblua5.1-0:amd64.

Preparing to unpack …/liblua5.1-0_5.1.5-8ubuntu1_amd64.deb …

Unpacking liblua5.1-0:amd64 (5.1.5-8ubuntu1) …

Selecting previously unselected package libicu55:amd64.

Preparing to unpack …/libicu55_55.1-7ubuntu0.3_amd64.deb …

Unpacking libicu55:amd64 (55.1-7ubuntu0.3) …

Selecting previously unselected package libxml2:amd64.

Preparing to unpack …/libxml2_2.9.3+dfsg1-1ubuntu0.3_amd64.deb …

Unpacking libxml2:amd64 (2.9.3+dfsg1-1ubuntu0.3) …

Selecting previously unselected package apache2-bin.

Preparing to unpack …/apache2-bin_2.4.18-2ubuntu3.5_amd64.deb …

Unpacking apache2-bin (2.4.18-2ubuntu3.5) …

Selecting previously unselected package apache2-utils.

Preparing to unpack …/apache2-utils_2.4.18-2ubuntu3.5_amd64.deb …

Unpacking apache2-utils (2.4.18-2ubuntu3.5) …

Selecting previously unselected package apache2-data.

Preparing to unpack …/apache2-data_2.4.18-2ubuntu3.5_all.deb …

Unpacking apache2-data (2.4.18-2ubuntu3.5) …

Selecting previously unselected package apache2.

Preparing to unpack …/apache2_2.4.18-2ubuntu3.5_amd64.deb …

Unpacking apache2 (2.4.18-2ubuntu3.5) …

Selecting previously unselected package libmagic1:amd64.

Preparing to unpack …/libmagic1_1%3a5.25-2ubuntu1_amd64.deb …

Unpacking libmagic1:amd64 (1:5.25-2ubuntu1) …

Selecting previously unselected package file.

Preparing to unpack …/file_1%3a5.25-2ubuntu1_amd64.deb …

Unpacking file (1:5.25-2ubuntu1) …

Selecting previously unselected package iproute2.

Preparing to unpack …/iproute2_4.3.0-1ubuntu3.16.04.2_amd64.deb …

Unpacking iproute2 (4.3.0-1ubuntu3.16.04.2) …

Selecting previously unselected package ifupdown.

Preparing to unpack …/ifupdown_0.8.10ubuntu1.2_amd64.deb …

Unpacking ifupdown (0.8.10ubuntu1.2) …

Selecting previously unselected package libisc-export160.

Preparing to unpack …/libisc-export160_1%3a9.10.3.dfsg.P4-8ubuntu1.9_amd64.deb …

Unpacking libisc-export160 (1:9.10.3.dfsg.P4-8ubuntu1.9) …

Selecting previously unselected package libdns-export162.

Preparing to unpack …/libdns-export162_1%3a9.10.3.dfsg.P4-8ubuntu1.9_amd64.deb …

Unpacking libdns-export162 (1:9.10.3.dfsg.P4-8ubuntu1.9) …

Selecting previously unselected package isc-dhcp-client.

Preparing to unpack …/isc-dhcp-client_4.3.3-5ubuntu12.7_amd64.deb …

Unpacking isc-dhcp-client (4.3.3-5ubuntu12.7) …

Selecting previously unselected package isc-dhcp-common.

Preparing to unpack …/isc-dhcp-common_4.3.3-5ubuntu12.7_amd64.deb …

Unpacking isc-dhcp-common (4.3.3-5ubuntu12.7) …

Selecting previously unselected package libxtables11:amd64.

Preparing to unpack …/libxtables11_1.6.0-2ubuntu3_amd64.deb …

Unpacking libxtables11:amd64 (1.6.0-2ubuntu3) …

Selecting previously unselected package netbase.

Preparing to unpack …/archives/netbase_5.3_all.deb …

Unpacking netbase (5.3) …

Selecting previously unselected package libsasl2-modules:amd64.

Preparing to unpack …/libsasl2-modules_2.1.26.dfsg1-14build1_amd64.deb …

Unpacking libsasl2-modules:amd64 (2.1.26.dfsg1-14build1) …

Selecting previously unselected package openssl.

Preparing to unpack …/openssl_1.0.2g-1ubuntu4.9_amd64.deb …

Unpacking openssl (1.0.2g-1ubuntu4.9) …

Selecting previously unselected package xml-core.

Preparing to unpack …/xml-core_0.13+nmu2_all.deb …

Unpacking xml-core (0.13+nmu2) …

Selecting previously unselected package rename.

Preparing to unpack …/archives/rename_0.20-4_all.deb …

Unpacking rename (0.20-4) …

Selecting previously unselected package ssl-cert.

Preparing to unpack …/ssl-cert_1.0.37_all.deb …

Unpacking ssl-cert (1.0.37) …

Processing triggers for libc-bin (2.23-0ubuntu9) …

Processing triggers for systemd (229-4ubuntu21) …

Setting up libatm1:amd64 (1:2.5.1-1.5) …

Setting up libmnl0:amd64 (1.0.3-5) …

Setting up libgdbm3:amd64 (1.8.3-13.1) …

Setting up sgml-base (1.26+nmu4ubuntu1) …

Setting up perl-modules-5.22 (5.22.1-9ubuntu0.2) …

Setting up libperl5.22:amd64 (5.22.1-9ubuntu0.2) …

Setting up perl (5.22.1-9ubuntu0.2) …

update-alternatives: using /usr/bin/prename to provide /usr/bin/rename (rename) in auto mode

Setting up mime-support (3.59ubuntu1) …

Setting up libapr1:amd64 (1.5.2-3) …

Setting up libexpat1:amd64 (2.1.0-7ubuntu0.16.04.3) …

Setting up libssl1.0.0:amd64 (1.0.2g-1ubuntu4.9) …

debconf: unable to initialize frontend: Dialog

debconf: (TERM is not set, so the dialog frontend is not usable.)

debconf: falling back to frontend: Readline

Setting up libaprutil1:amd64 (1.5.4-1build1) …

Setting up libsqlite3-0:amd64 (3.11.0-1ubuntu1) …

Setting up libaprutil1-dbd-sqlite3:amd64 (1.5.4-1build1) …

Setting up libgmp10:amd64 (2:6.1.0+dfsg-2) …

Setting up libnettle6:amd64 (3.2-1ubuntu0.16.04.1) …

Setting up libhogweed4:amd64 (3.2-1ubuntu0.16.04.1) …

Setting up libidn11:amd64 (1.32-3ubuntu1.2) …

Setting up libffi6:amd64 (3.2.1-4) …

Setting up libp11-kit0:amd64 (0.23.2-5~ubuntu16.04.1) …

Setting up libtasn1-6:amd64 (4.7-3ubuntu0.16.04.2) …

Setting up libgnutls30:amd64 (3.4.10-4ubuntu1.4) …

Setting up libroken18-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libasn1-8-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libhcrypto4-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libheimbase1-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libwind0-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libhx509-5-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libkrb5-26-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libheimntlm0-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libgssapi3-heimdal:amd64 (1.7~git20150920+dfsg-4ubuntu1.16.04.1) …

Setting up libsasl2-modules-db:amd64 (2.1.26.dfsg1-14build1) …

Setting up libsasl2-2:amd64 (2.1.26.dfsg1-14build1) …

Setting up libldap-2.4-2:amd64 (2.4.42+dfsg-2ubuntu3.2) …

Setting up libaprutil1-ldap:amd64 (1.5.4-1build1) …

Setting up liblua5.1-0:amd64 (5.1.5-8ubuntu1) …

Setting up libicu55:amd64 (55.1-7ubuntu0.3) …

Setting up libxml2:amd64 (2.9.3+dfsg1-1ubuntu0.3) …

Setting up apache2-bin (2.4.18-2ubuntu3.5) …

Setting up apache2-utils (2.4.18-2ubuntu3.5) …

Setting up apache2-data (2.4.18-2ubuntu3.5) …

Setting up apache2 (2.4.18-2ubuntu3.5) …

Enabling module mpm_event.

Enabling module authz_core.

Enabling module authz_host.

Enabling module authn_core.

Enabling module auth_basic.

Enabling module access_compat.

Enabling module authn_file.

Enabling module authz_user.

Enabling module alias.

Enabling module dir.

Enabling module autoindex.

Enabling module env.

Enabling module mime.

Enabling module negotiation.

Enabling module setenvif.

Enabling module filter.

Enabling module deflate.

Enabling module status.

Enabling conf charset.

Enabling conf localized-error-pages.

Enabling conf other-vhosts-access-log.

Enabling conf security.

Enabling conf serve-cgi-bin.

Enabling site 000-default.

invoke-rc.d: could not determine current runlevel

invoke-rc.d: policy-rc.d denied execution of start.

Setting up libmagic1:amd64 (1:5.25-2ubuntu1) …

Setting up file (1:5.25-2ubuntu1) …

Setting up iproute2 (4.3.0-1ubuntu3.16.04.2) …

Setting up ifupdown (0.8.10ubuntu1.2) …

Creating /etc/network/interfaces.

Setting up libisc-export160 (1:9.10.3.dfsg.P4-8ubuntu1.9) …

Setting up libdns-export162 (1:9.10.3.dfsg.P4-8ubuntu1.9) …

Setting up isc-dhcp-client (4.3.3-5ubuntu12.7) …

Setting up isc-dhcp-common (4.3.3-5ubuntu12.7) …

Setting up libxtables11:amd64 (1.6.0-2ubuntu3) …

Setting up netbase (5.3) …

Setting up libsasl2-modules:amd64 (2.1.26.dfsg1-14build1) …

Setting up openssl (1.0.2g-1ubuntu4.9) …

Setting up xml-core (0.13+nmu2) …

Setting up rename (0.20-4) …

update-alternatives: using /usr/bin/file-rename to provide /usr/bin/rename (rename) in auto mode

Setting up ssl-cert (1.0.37) …

debconf: unable to initialize frontend: Dialog

debconf: (TERM is not set, so the dialog frontend is not usable.)

debconf: falling back to frontend: Readline

Processing triggers for libc-bin (2.23-0ubuntu9) …

Processing triggers for systemd (229-4ubuntu21) …

Processing triggers for sgml-base (1.26+nmu4ubuntu1) …

Removing intermediate container d9e9198a3e05

 ---> 80596dd5c11e

Step 5/6 : RUN echo "Apache running!!" >> /var/www/html/index.html

 ---> Running in 2b2892574b8c

Removing intermediate container 2b2892574b8c

 ---> 4559135d9b47

Step 6/6 : EXPOSE 80

 ---> Running in 9427afe144bb

Removing intermediate container 9427afe144bb

 ---> 17334a666342

Successfully built 17334a666342

Successfully tagged ubuntu16.04/apache2:latest

vskumar@ubuntu:~/apache1$

=============== You can see the image id: 17334a666342 ====>

The image has been built without errors and tagged as ubuntu16.04/apache2:latest.

Step 2: Check the Apache image

Let me list the current images:

=========== Current docker images ====>

vskumar@ubuntu:~/apache1$

vskumar@ubuntu:~/apache1$ sudo docker images

REPOSITORY            TAG      IMAGE ID       CREATED         SIZE

ubuntu16.04/apache2   latest   17334a666342   8 minutes ago   261MB

ubuntu                16.04    20c44cd7596f   2 weeks ago     123MB

ubuntu                latest   20c44cd7596f   2 weeks ago     123MB

vskumar@ubuntu:~/apache1$

===============================>
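Besides the listing, the image metadata can be queried directly. The sketch below assumes the image name from the build command in this session and that the docker CLI can be run without sudo; if either assumption does not hold, it only prints a hint. It shows the ports recorded by EXPOSE and the image size in bytes.

```shell
#!/bin/sh
# Image name taken from the build command in this session.
image="ubuntu16.04/apache2"

if command -v docker >/dev/null 2>&1 && docker image inspect "$image" >/dev/null 2>&1; then
    # Print the ports recorded by EXPOSE, then the image size in bytes.
    docker image inspect --format '{{json .Config.ExposedPorts}} {{.Size}}' "$image"
else
    echo "image $image not found, or docker is not usable from this shell; build it first"
fi
```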

Now, let us check the docker networks.

======= Docker networks list ======>

vskumar@ubuntu:~/apache1$ sudo docker network ls

NETWORK ID     NAME     DRIVER   SCOPE

c16796e9072f   bridge   bridge   local

b12df1d5fa4c   host     host     local

70b971906469   none     null     local

vskumar@ubuntu:~/apache1$

=========================>

For the container networking specifications, please visit:

https://docs.docker.com/engine/userguide/networking/#the-default-bridge-network

Step 3: Connect the Apache container to the network

To reach the services through the docker bridge, the container must be attached to the bridge network. A container started without a --network option is attached to the default bridge, as below:

==== Started the latest image as container1, connected to the default bridge ====>

vskumar@ubuntu:~/apache1$ sudo docker run -itd --name=container1 ubuntu16.04/apache2

6df11fd4bbffa4c41fcef86bb314c8796d663827cf85321b6bbc2a803d0de58b

vskumar@ubuntu:~/apache1$

==========================>
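Note that the dockerfile in this session defines no CMD, so container1 runs the base image's default shell and Apache is not started automatically, and EXPOSE alone does not publish port 80 to the host. As a sketch under my own assumptions (the container name container1-web and the host port 8080 are hypothetical), the script below assembles a docker run command that starts Apache in the foreground and publishes the port. It only prints the command unless RUN_IT=1 is set.

```shell
#!/bin/sh
image="ubuntu16.04/apache2"   # image built in this session
name="container1-web"         # hypothetical container name
host_port=8080                # hypothetical free port on the host
container_port=80             # port declared by EXPOSE in the dockerfile

# -d runs detached; -p host:container publishes the port on the host.
run_cmd="docker run -d --name=$name -p $host_port:$container_port $image apache2ctl -D FOREGROUND"
echo "$run_cmd"

if [ "${RUN_IT:-0}" = "1" ]; then
    # $run_cmd is left unquoted on purpose so the shell splits it into words.
    sudo $run_cmd
    echo "now open http://localhost:$host_port/ in the browser"
fi
```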

Now let us inspect the networks as below:

====== See that the above container is attached to the bridge network as below =======>

vskumar@ubuntu:~/apache1$

vskumar@ubuntu:~/apache1$ sudo docker network inspect bridge

[
    {
        "Name": "bridge",
        "Id": "c16796e9072f2a9bd3273ee6733260a7be8c34cc72099eb496180d75e4298bf8",
        "Created": "2017-12-04T03:17:49.732438566-08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.17.0.0/16",
                    "Gateway": "172.17.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "6df11fd4bbffa4c41fcef86bb314c8796d663827cf85321b6bbc2a803d0de58b": {
                "Name": "container1",
                "EndpointID": "fa1b98a6a8455d7bcbe3260672123dd9ba6339cec25b4992031d5815ba48affa",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]

vskumar@ubuntu:~/apache1$

=============================>

Observe the container1 section under "Containers": its IP address (172.17.0.2/16) is recorded there.
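The IPv4Address field is in CIDR form, that is, the address followed by the prefix length. A small sketch of splitting it with plain shell parameter expansion, using the value copied from the inspect output of this session:

```shell
#!/bin/sh
# Value copied from the "IPv4Address" field of container1.
cidr="172.17.0.2/16"

ip="${cidr%/*}"       # drop the shortest trailing "/..." part, leaving the address
prefix="${cidr#*/}"   # drop the shortest leading ".../" part, leaving the prefix length

echo "address=$ip prefix=$prefix"

# With a running daemon, the address alone can be read with:
#   sudo docker inspect --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' container1
```

The /16 prefix matches the "Subnet" of the default bridge (172.17.0.0/16), and the gateway 172.17.0.1 is the docker0 interface on the host.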

The container is already running in the background, so let us open a shell inside it now:

=================>

vskumar@ubuntu:~/apache1$ sudo docker exec -i -t container1 /bin/bash

root@6df11fd4bbff:/#

root@6df11fd4bbff:/# ls

bin dev home lib64 mnt proc run srv tmp var

boot etc lib media opt root sbin sys usr

root@6df11fd4bbff:/#

================>

Let us check its /etc/hosts file contents.

=============================>

root@6df11fd4bbff:/# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 6df11fd4bbff

root@6df11fd4bbff:/#

=============================>
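Docker writes the container's own hostname (the short container ID) and its bridge IP into /etc/hosts, as the last line above shows. A small sketch of how that entry could be looked up from a script follows. The function name `hosts_ip_for` is hypothetical, and the optional second argument exists only so the lookup can be tried on a copy of the file:

```shell
# Print the first IP address that a hosts-format file maps to the given name.
# $1 = name to look up, $2 = hosts file (defaults to /etc/hosts).
# Lines starting with '#' are skipped.
hosts_ip_for() {
  awk -v name="$1" '
    /^[ \t]*#/ { next }
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "${2:-/etc/hosts}"
}
```

Inside the container, `hosts_ip_for "$(hostname)"` would look up the container's own entry in the file listed above.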

Let us add some packages to this container.

To make HTTP requests to any IP address from this container, we need the curl utility installed on it.

======== Installing curl utility on container1 ====>

root@6df11fd4bbff:/# apt-get install curl

Reading package lists… Done

Building dependency tree

Reading state information… Done

The following additional packages will be installed:

ca-certificates krb5-locales libcurl3-gnutls libgssapi-krb5-2 libk5crypto3 libkeyutils1

libkrb5-3 libkrb5support0 librtmp1

Suggested packages:

krb5-doc krb5-user

The following NEW packages will be installed:

ca-certificates curl krb5-locales libcurl3-gnutls libgssapi-krb5-2 libk5crypto3 libkeyutils1

libkrb5-3 libkrb5support0 librtmp1

0 upgraded, 10 newly installed, 0 to remove and 2 not upgraded.

Need to get 1072 kB of archives.

After this operation, 6220 kB of additional disk space will be used.

Do you want to continue? [Y/n] y

Get:1 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 ca-certificates all 20170717~16.04.1 [168 kB]

Get:2 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 krb5-locales all 1.13.2+dfsg-5ubuntu2 [13.2 kB]

Get:3 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libkrb5support0 amd64 1.13.2+dfsg-5ubuntu2 [30.8 kB]

Get:4 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libk5crypto3 amd64 1.13.2+dfsg-5ubuntu2 [81.2 kB]

Get:5 http://archive.ubuntu.com/ubuntu xenial/main amd64 libkeyutils1 amd64 1.5.9-8ubuntu1 [9904 B]

Get:6 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libkrb5-3 amd64 1.13.2+dfsg-5ubuntu2 [273 kB]

Get:7 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libgssapi-krb5-2 amd64 1.13.2+dfsg-5ubuntu2 [120 kB]

Get:8 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 librtmp1 amd64 2.4+20151223.gitfa8646d-1ubuntu0.1 [54.4 kB]

Get:9 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 libcurl3-gnutls amd64 7.47.0-1ubuntu2.5 [184 kB]

Get:10 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 curl amd64 7.47.0-1ubuntu2.5 [138 kB]

Fetched 1072 kB in 3s (272 kB/s)

debconf: delaying package configuration, since apt-utils is not installed

Selecting previously unselected package ca-certificates.

(Reading database … 7907 files and directories currently installed.)

Preparing to unpack …/ca-certificates_20170717~16.04.1_all.deb …

Unpacking ca-certificates (20170717~16.04.1) …

Selecting previously unselected package krb5-locales.

Preparing to unpack …/krb5-locales_1.13.2+dfsg-5ubuntu2_all.deb …

Unpacking krb5-locales (1.13.2+dfsg-5ubuntu2) …

Selecting previously unselected package libkrb5support0:amd64.

Preparing to unpack …/libkrb5support0_1.13.2+dfsg-5ubuntu2_amd64.deb …

Unpacking libkrb5support0:amd64 (1.13.2+dfsg-5ubuntu2) …

Selecting previously unselected package libk5crypto3:amd64.

Preparing to unpack …/libk5crypto3_1.13.2+dfsg-5ubuntu2_amd64.deb …

Unpacking libk5crypto3:amd64 (1.13.2+dfsg-5ubuntu2) …

Selecting previously unselected package libkeyutils1:amd64.

Preparing to unpack …/libkeyutils1_1.5.9-8ubuntu1_amd64.deb …

Unpacking libkeyutils1:amd64 (1.5.9-8ubuntu1) …

Selecting previously unselected package libkrb5-3:amd64.

Preparing to unpack …/libkrb5-3_1.13.2+dfsg-5ubuntu2_amd64.deb …

Unpacking libkrb5-3:amd64 (1.13.2+dfsg-5ubuntu2) …

Selecting previously unselected package libgssapi-krb5-2:amd64.

Preparing to unpack …/libgssapi-krb5-2_1.13.2+dfsg-5ubuntu2_amd64.deb …

Unpacking libgssapi-krb5-2:amd64 (1.13.2+dfsg-5ubuntu2) …

Selecting previously unselected package librtmp1:amd64.

Preparing to unpack …/librtmp1_2.4+20151223.gitfa8646d-1ubuntu0.1_amd64.deb …

Unpacking librtmp1:amd64 (2.4+20151223.gitfa8646d-1ubuntu0.1) …

Selecting previously unselected package libcurl3-gnutls:amd64.

Preparing to unpack …/libcurl3-gnutls_7.47.0-1ubuntu2.5_amd64.deb …

Unpacking libcurl3-gnutls:amd64 (7.47.0-1ubuntu2.5) …

Selecting previously unselected package curl.

Preparing to unpack …/curl_7.47.0-1ubuntu2.5_amd64.deb …

Unpacking curl (7.47.0-1ubuntu2.5) …

Processing triggers for libc-bin (2.23-0ubuntu9) …

Setting up ca-certificates (20170717~16.04.1) …

debconf: unable to initialize frontend: Dialog

debconf: (No usable dialog-like program is installed, so the dialog based frontend cannot be used. at /usr/share/perl5/Debconf/FrontEnd/Dialog.pm line 76.)

debconf: falling back to frontend: Readline

Setting up krb5-locales (1.13.2+dfsg-5ubuntu2) …

Setting up libkrb5support0:amd64 (1.13.2+dfsg-5ubuntu2) …

Setting up libk5crypto3:amd64 (1.13.2+dfsg-5ubuntu2) …

Setting up libkeyutils1:amd64 (1.5.9-8ubuntu1) …

Setting up libkrb5-3:amd64 (1.13.2+dfsg-5ubuntu2) …

Setting up libgssapi-krb5-2:amd64 (1.13.2+dfsg-5ubuntu2) …

Setting up librtmp1:amd64 (2.4+20151223.gitfa8646d-1ubuntu0.1) …

Setting up libcurl3-gnutls:amd64 (7.47.0-1ubuntu2.5) …

Setting up curl (7.47.0-1ubuntu2.5) …

Processing triggers for ca-certificates (20170717~16.04.1) …

Updating certificates in /etc/ssl/certs…

148 added, 0 removed; done.

Running hooks in /etc/ca-certificates/update.d…

done.

Processing triggers for libc-bin (2.23-0ubuntu9) …

root@6df11fd4bbff:/#

================= End of curl installation ====>
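When the same installation has to run from a script, the "Do you want to continue? [Y/n]" prompt and the debconf frontend messages seen above get in the way. The sketch below assumes it runs as root inside the container. `ensure_cmd` is a hypothetical helper name; `-y` and the `DEBIAN_FRONTEND=noninteractive` setting are the usual way to keep apt-get from asking questions:

```shell
# Install a package only when its command is missing.
# $1 = command name, $2 = package name (defaults to the command name).
ensure_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0   # already installed, nothing to do
  fi
  apt-get update &&
    DEBIAN_FRONTEND=noninteractive apt-get install -y "${2:-$1}"
}

# Example call inside the container:
#   ensure_cmd curl
```

Checking with `command -v` first keeps repeated runs from contacting the package archive again.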

Step 4: Check the container connectivity in docker network

Now, let me ping this container from the docker host to check its connectivity while the interactive session is still open.

===========================>

vskumar@ubuntu:~$ ping 172.17.0.2

========= While container1 is running, the ping gets replies from 172.17.0.2, which means communication is established between the Docker host/engine and the container ======>

Now, let me exit the container's interactive session as below:

=========== Exit container1 ======>

root@6df11fd4bbff:/#

root@6df11fd4bbff:/# exit

exit

vskumar@ubuntu:~/apache1$

===========================>

Now let me ping container1 again from the docker host and check the results:

===========================>

vskumar@ubuntu:~$

vskumar@ubuntu:~$ ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

From 172.17.0.1 icmp_seq=9 Destination Host Unreachable

From 172.17.0.1 icmp_seq=10 Destination Host Unreachable

From 172.17.0.1 icmp_seq=11 Destination Host Unreachable

From 172.17.0.1 icmp_seq=12 Destination Host Unreachable

From 172.17.0.1 icmp_seq=13 Destination Host Unreachable

From 172.17.0.1 icmp_seq=14 Destination Host Unreachable

From 172.17.0.1 icmp_seq=15 Destination Host Unreachable

^C

--- 172.17.0.2 ping statistics ---

30 packets transmitted, 0 received, +7 errors, 100% packet loss, time 29695ms

pipe 15

vskumar@ubuntu:~$

==== It shows unreachable because container1 is stopped =====>

Now let us check the containers status as below:

===== Containers status ======>

vskumar@ubuntu:~$ sudo docker ps -a

[sudo] password for vskumar:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6df11fd4bbff ubuntu16.04/apache2 "/bin/bash" 35 minutes ago Exited (0) 7 minutes ago container1

76ccfb044dd1 ubuntu16.04/apache2 "/bin/bash" About an hour ago Exited (0) About an hour ago upbeat_chandrasekhar

vskumar@ubuntu:~$

========= So it shows that container1 has exited =====>

The takeaway from this exercise is that we can ping a container only while it is running.

Let us start the container again in non-interactive mode and check its ping status:

======================>

vskumar@ubuntu:~/apache1$

vskumar@ubuntu:~/apache1$ sudo docker start container1

container1

vskumar@ubuntu:~/apache1$ sudo docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6df11fd4bbff ubuntu16.04/apache2 "/bin/bash" 38 minutes ago Up 5 seconds 80/tcp container1

76ccfb044dd1 ubuntu16.04/apache2 "/bin/bash" About an hour ago Exited (0) About an hour ago upbeat_chandrasekhar

vskumar@ubuntu:~/apache1$

===================>
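The STATUS column is what tells a running container ("Up ...") from a stopped one ("Exited ..."). Here is a small sketch of how a script could make that distinction. `is_up` and `container_status` are hypothetical helper names, while `--filter` and `--format` are standard `docker ps` options:

```shell
# Classify a STATUS value from `docker ps -a`: "Up ..." means running.
is_up() {
  case "$1" in
    Up*) return 0 ;;
    *)   return 1 ;;
  esac
}

# Hypothetical helper: print the STATUS text of containers whose name
# contains $1 (the name filter matches substrings, not only exact names).
# It is only defined here, not called, because it needs a Docker daemon.
container_status() {
  sudo docker ps -a --filter "name=$1" --format '{{.Status}}'
}

# Example on the docker host:
#   is_up "$(container_status container1)" && echo "container1 is running"
```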

The ping now gets replies from the container:

====== Pinging the non-interactive container ====>

vskumar@ubuntu:~$

vskumar@ubuntu:~$ ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.296 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.161 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.142 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.147 ms

64 bytes from 172.17.0.2: icmp_seq=5 ttl=64 time=0.144 ms

64 bytes from 172.17.0.2: icmp_seq=6 ttl=64 time=0.145 ms

^C

--- 172.17.0.2 ping statistics ---

6 packets transmitted, 6 received, 0% packet loss, time 5113ms

rtt min/avg/max/mdev = 0.142/0.172/0.296/0.057 ms

vskumar@ubuntu:~$

===============================>

So we have seen its communication in both modes: interactive and non-interactive.
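The difference between the two ping runs is visible in the summary line: 100% packet loss for the stopped container and 0% for the running one. A minimal sketch for reading that figure in a script follows. `packet_loss` and `is_reachable` are hypothetical helper names, and the ping options assume the Linux iputils ping used on the ubuntu host:

```shell
# Read ping output on stdin and print the packet-loss percentage
# from the summary line (for example "0" or "100").
packet_loss() {
  sed -n 's/.* \([0-9][0-9.]*\)% packet loss.*/\1/p'
}

# Hypothetical helper: succeed only if at least one reply comes back.
# -c limits the number of probes, -W is the wait time per reply in seconds.
# It is only defined here, not called.
is_reachable() {
  ping -c 3 -W 2 "$1" >/dev/null 2>&1
}

# Example on the docker host:
#   ping -c 3 172.17.0.2 | packet_loss
```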

Now, let us check the status of apache2 on container1 from the interactive mode and make it ‘active’ as below:

========== Apache2 status on container1 ======>

root@6df11fd4bbff:/#

root@6df11fd4bbff:/#

root@6df11fd4bbff:/# service apache2 status

* apache2 is not running

root@6df11fd4bbff:/# service apache2 start

* Starting Apache httpd web server apache2 AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using 172.17.0.2. Set the 'ServerName' directive globally to suppress this message

*

root@6df11fd4bbff:/# service apache2 status

* apache2 is running

root@6df11fd4bbff:/#

======================>

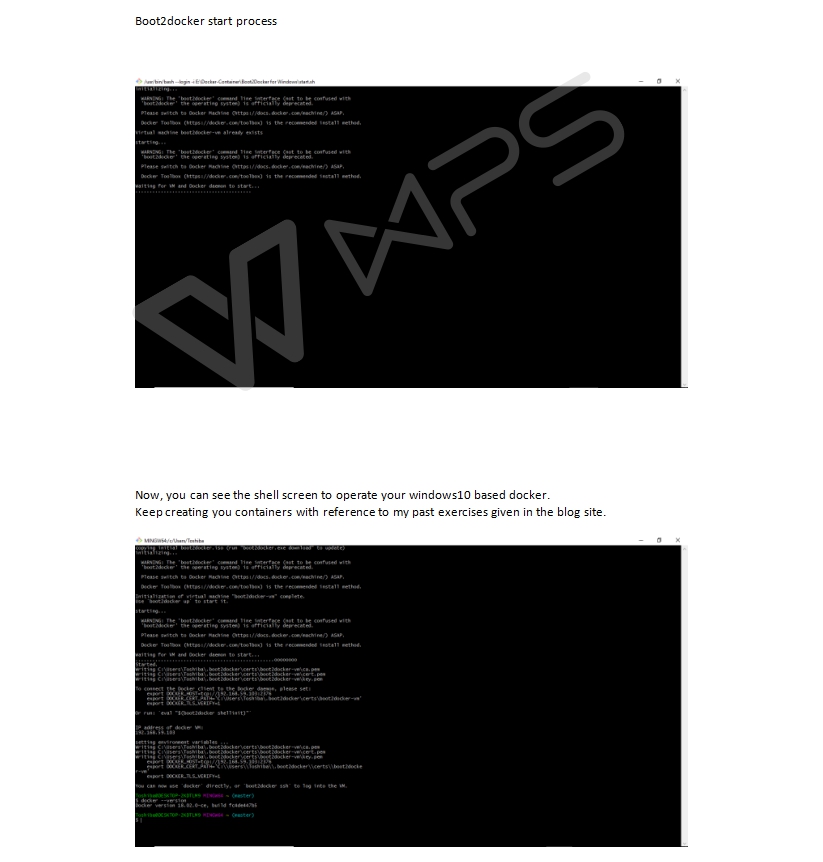

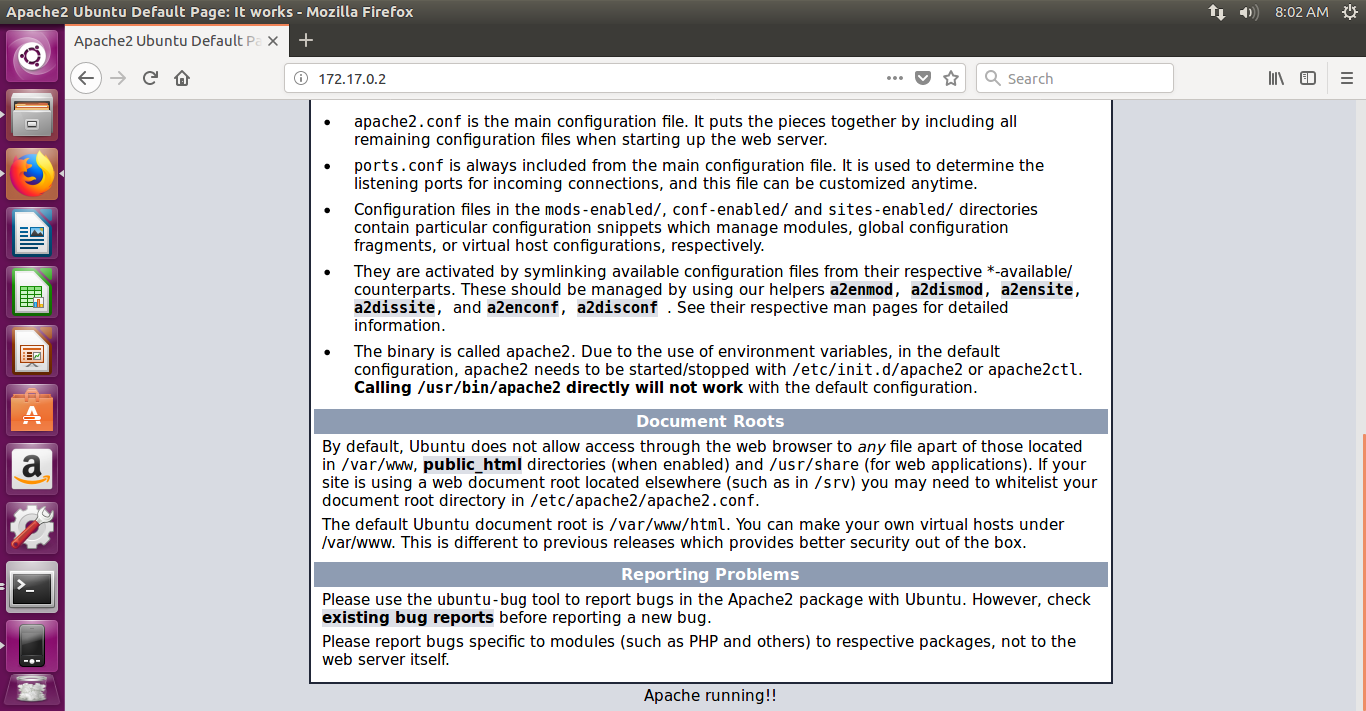

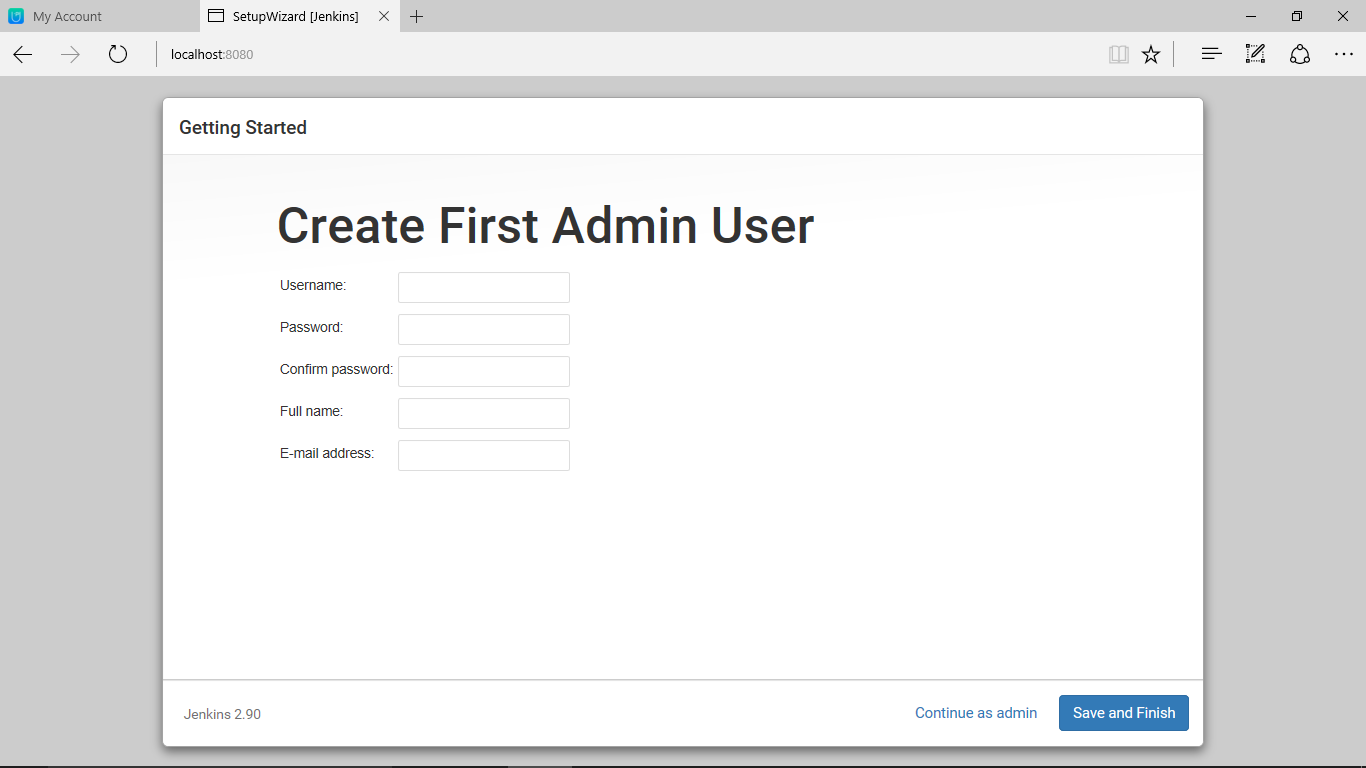

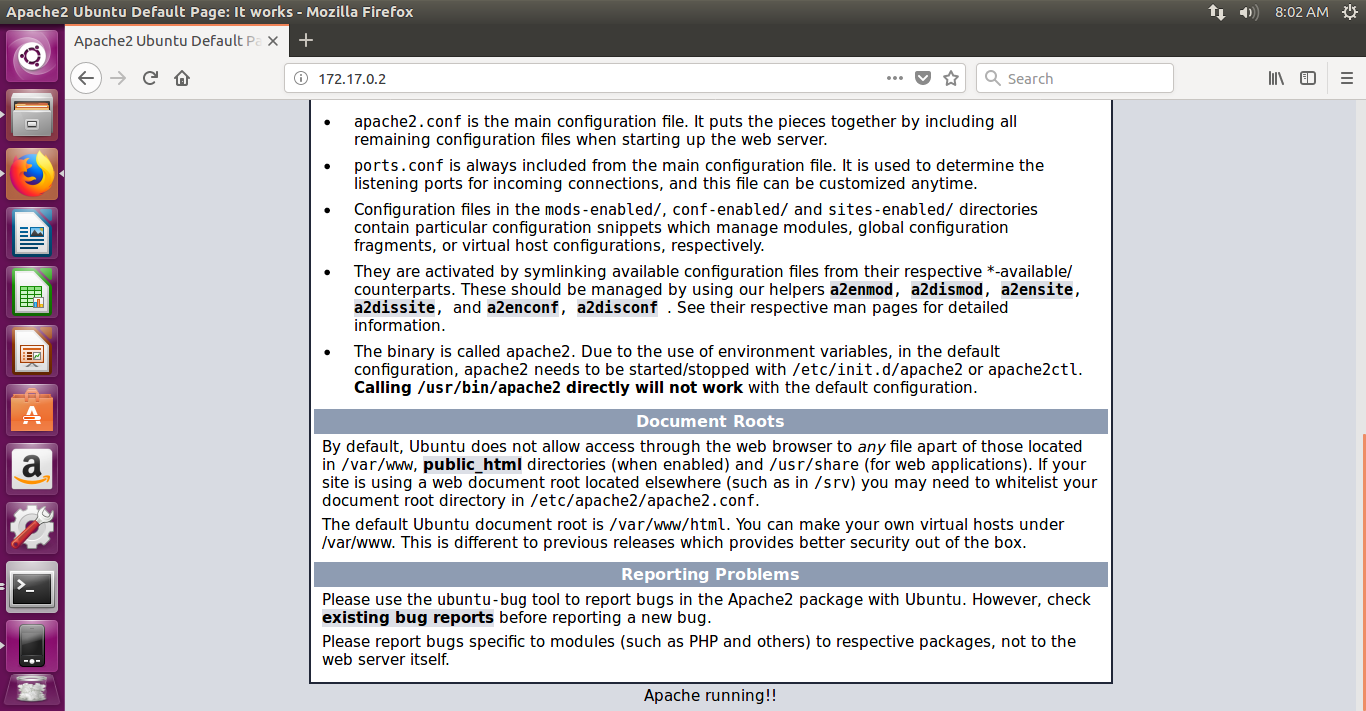

Step 5: Check the Apache home page with the container ip in ubuntu host machine’s Firefox browser:

Now I want to go to my ubuntu host cloud machine and use the firefox browser to access the apache2 page. Let me try. Yes it is running well with ip address: 172.17.0.2, as a proof you can see the below images:

It is a great work we have done! we proved the container networking can be done well with docker containers.

From the ubuntu cloud host machine we have seen the above screenshot from apache2 web page. Please check the web page bottom message ‘Apache running!! ‘. This is the message given through ‘echo’ command in the dockerfile.
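The same check can be made from a terminal with the curl utility instead of a browser. This is a sketch only. It assumes apache2 has been started in container1 at 172.17.0.2, and `has_marker` is a hypothetical helper name:

```shell
# Succeed if the text on stdin contains the given fixed string.
has_marker() {
  grep -qF -- "$1"
}

# Example on the docker host (curl must be installed there too):
#   curl -fsS http://172.17.0.2/ | has_marker 'Apache running!!' && echo "page OK"
```

The `-f` option makes curl fail on HTTP errors, so the check does not pass on an error page.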

If you want to stop the service you can use the below command:

=============== Stopping apache2 server =====>

root@6df11fd4bbff:/# service apache2 stop

* Stopping Apache httpd web server apache2 *

root@6df11fd4bbff:/# service apache2 status

* apache2 is not running

root@6df11fd4bbff:/#

==============================>

Now, check your browser. You should get the message “Unable to connect”.

You need to start the service again as below to serve the web page:

root@6df11fd4bbff:/# service apache2 start

* Starting Apache httpd web server apache2 AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using 172.17.0.2. Set the 'ServerName' directive globally to suppress this message

root@6df11fd4bbff:/# service apache2 status

* apache2 is running

root@6df11fd4bbff:/#

=================== Restarted apache2 ============>

At this point, I want to stop this session.

In the next session, we will see some more examples with dockerfile usage to build containers.